This morning, Apple updated the MacBook Pro line with new internal components, including new processors, new graphics chips, a higher-resolution screen option on the 15” (finally), and bigger batteries.

This is usually significant news worthy of a homepage takeover for a week. But today, as Marc noted, the Apple homepage looks like this:

The new notebooks are relegated to a small promo unit along the bottom of the huge iPad graphic.

The message is clear: Macs aren’t Apple’s focus right now. This will be sad news to a lot of people, but I’m quite happy about it.

Computers are boring.

New applications can improve our lives. New form factors can be revolutionary. New networks and services can increase communication and enrich relationships. But the steady progress of CPU power and storage in personal computers doesn’t do much for me anymore. Today’s MacBook Pro encodes video faster than yesterday’s MacBook Pro, but how many people encode video? Today’s MacBook Pro plays high-end 3D games faster than yesterday’s MacBook Pro, but how many Apple buyers play high-end 3D games?

By comparison, to how many people might the iPad — a brand new form factor with a brand new OS, new interface paradigms, and new applications — be relevant and exciting?

Mac OS X 10.6, Snow Leopard, was released last fall. Nearly everything I do on my computer was unaffected by its changes in a user-noticeable way. There were very few compelling reasons for most people to upgrade.

My Mac Pro (admittedly a ridiculously awesome computer) is two years old. I have absolutely no reason to buy a new one as long as it keeps working. The Mac Pro lineup will be updated to the 6-core Westmere-series Xeons any day now, but I don’t need them, because my computer is fast enough already. And if I start hitting speed bottlenecks in the next year or two, they’re more likely to be alleviated by upgrading to SSDs than by replacing the whole computer.

If your CPU power dropped by 75% for an hour every day, how long would it take you to notice?

How much of what you do on your $1500–3000 computer could be accomplished on a $500 iPad?

John Gruber, last week:

Mac OS X 10.7 development continues, but with a reduced team and an unknown schedule.

I read this and wasn’t disappointed or scared at all. I’m very happy with 10.6. I was very happy with 10.5.

What do you want out of 10.7?

I use desktop computers for many hours every day. They are my profession, my hobby, and my leisure. But the pace of their software innovation that’s relevant to my everyday use has dramatically slowed. It’s not a bad thing. On the contrary, it’s great that I don’t need to constantly update and upgrade everything to maintain a stable, full-featured computing environment. This is what mature, stable products and industries are like. They work, and they’re built on decades of progress, but modern advances are infrequent and incremental.

Mobile and tablet computing are immature and unstable. Major revolutions still occur frequently. We still haven’t figured out how this stuff all works or what we can do with it. Mobile computing just started. Mobile computing is, today, where portable computing was in 1983.

Desk computers aren’t going away anytime soon. I’d be surprised if they went away during our lifetimes.

A desk computer is a general-purpose tool. That’s what makes them so great: they can do a massive variety of tasks, even if they weren’t designed to do them when they were made. People will always need them.

But specialized tools will continue to eat away subsets of what desk computers do, and now that desk computers have reached long-term maturity, the specialized tools are far more interesting to most people.

◆

Most news outlets, including TV news shows and networks, newspapers, news websites, and blogs, are targeted at news junkies: they never want to miss a story, and they want to be the first to report it to you.

If you look back on these stories even one week later, the majority of them seem unimportant or redundant in retrospect. And if you stop consuming the firehose for a few days or more, you’re lost — there are very few publications that give a general overview of what has happened, especially when venturing outside of mainstream front-page news and into a subsection, such as technology news.

I want last week’s news, but only what I need to know, and only if it has proven to have relevance beyond the day it was published.

Tell me an overview of what happened in a given field, like technology, but with the hindsight of a week to sleep on it and evaluate what really matters. The delay also provides more time for investigation and analysis to reduce blind speculation, promote thoughtful writing, and encourage big-picture perspective.

Don’t give me any “breaking” information or up-to-the-minute stories. Mentioning any event that happened less than 7 days ago is strictly prohibited.

The result would likely have far less content than most news sites. Some weeks may not have any content at all. And that’s OK.
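The rule is simple enough to sketch in a few lines of Python. This is only an illustration of the idea, not a real site: the `Story` type and its `still_matters` flag are hypothetical, and the flag stands in for an editor’s judgment made with a week of hindsight.

```python
from dataclasses import dataclass
from datetime import date, timedelta

MIN_AGE = timedelta(days=7)  # nothing newer than 7 days gets in


@dataclass
class Story:
    title: str
    happened_on: date
    still_matters: bool  # hypothetical editorial call, made with hindsight


def weekly_digest(stories, today):
    """Keep stories that are at least 7 days old and still judged relevant."""
    eligible = [
        s for s in stories
        if today - s.happened_on >= MIN_AGE and s.still_matters
    ]
    # Oldest first, so the digest reads chronologically.
    return sorted(eligible, key=lambda s: s.happened_on)
```

An empty list is a perfectly valid result: that’s the week with no content at all.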

Someday, maybe I’ll start this site for technology news. But I bet I’ll never get to it, and I’d love for someone else to beat me to it.

(Previous idea: Logarithmic calendar view.)

◆

I’ve been sitting on this idea forever, but the chances that I’ll ever do anything with it are close enough to zero that I’m letting it go. (It’s not original, either, but it has yet to make it into a widespread calendar product.)

The basic premise is obvious: Calendar software overdoes the metaphor and carries too much baggage from its physical-object predecessor.

I find myself always keeping my calendars in “month” view, since most weeks only have a few items. (I work the same schedule every weekday and I rarely meet with people.)

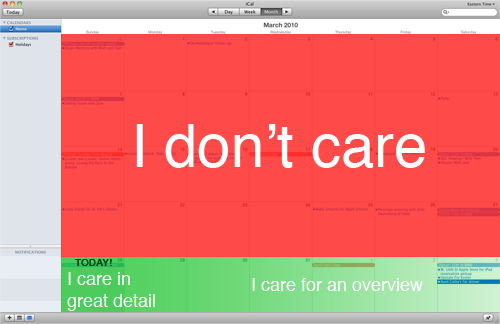

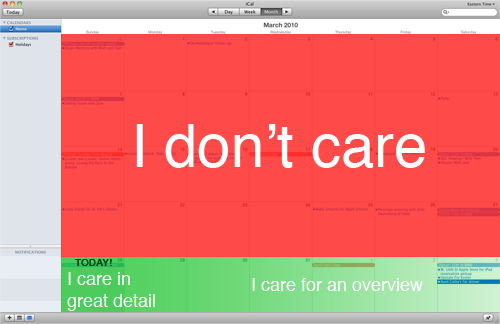

The problem is obvious when it’s near the end of a month, like today:

(The same problem applies to the Day and Week views at the end of their intervals.)

There are two problems here:

- I don’t care about the past. It can be hidden in a separate view for the rare occasions that I want to look at past items. Yet the past is consuming the majority of the interface.

- I don’t care about present-and-future items with equal granularity. I wouldn’t mind seeing today in an hour-by-hour view, but I don’t need the same granularity when showing events three days from now.

  - If I switch to a more granular view for today, I lose the ability to see any of what’s happening next week.

The ideal view would contain today’s events in great detail, then events from the next few days in less detail, then an overview of events in the next 3-5 weeks.
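Here’s a rough sketch of how that bucketing might work, in Python. Everything in it is my own assumption for illustration: the function name `log_calendar`, the `near_days` and `far_weeks` cutoffs, and the choice to show the far-off weeks as simple event counts. No calendar product works this way as far as I know.

```python
from collections import defaultdict
from datetime import date, datetime, timedelta


def log_calendar(events, now, near_days=3, far_weeks=5):
    """Split (datetime, title) events into three sections of decreasing detail.

    Returns (hourly, daily, weekly):
      hourly: hour -> titles, for today only
      daily:  date -> titles, for the next `near_days` days
      weekly: Monday of the week -> event count, out to `far_weeks` weeks
    """
    today = now.date()
    near_end = today + timedelta(days=near_days)
    far_end = today + timedelta(weeks=far_weeks)

    hourly = defaultdict(list)
    daily = defaultdict(list)
    weekly = defaultdict(int)

    for when, title in sorted(events):
        day = when.date()
        if day < today or day > far_end:
            continue  # the past (and the far future) stay out of this view
        if day == today:
            hourly[when.hour].append(title)
        elif day <= near_end:
            daily[day].append(title)
        else:
            week_start = day - timedelta(days=day.weekday())
            weekly[week_start] += 1

    return dict(hourly), dict(daily), dict(weekly)
```

The past never reaches the screen, and detail drops off the further out an event is, which addresses both problems above.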

Bonus idea

The same problems, with the same potential solution, apply to driving directions and navigation screens.

◆

We’re often told that we should design our websites and software to mimic real-life objects. The iPhone strengthened this idiom, and Apple has been driving this home hard for the iPad.

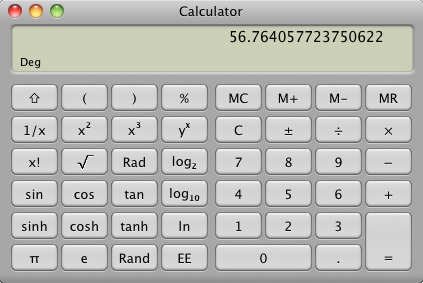

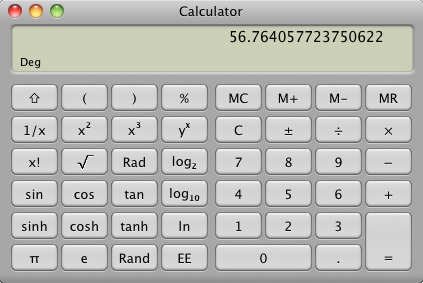

But it’s not absolute, and it’s not always the best idea. My favorite counterexample is the typical calculator app:

Nearly everything about a real calculator is faithfully reproduced, but with the good comes the bad: nearly every limitation and frustration has also been reproduced. There’s very little reason to use the software facsimile over its real-world equivalent, and in some ways, the physical object is better.

Despite being faithfully designed to look and work like a real-world object, the Calculator app hasn’t made any progress. It hasn’t advanced technology. It hasn’t made anything more useful or created new interaction models.

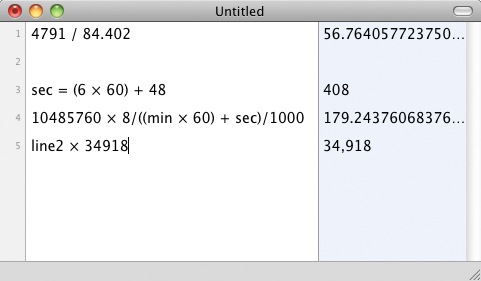

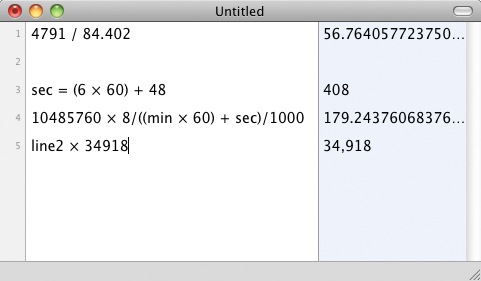

My preferred calculator, which I will keep blogging about until it’s ubiquitous, wasn’t designed against any physical objects because there’s no physical equivalent to what it does.

Please ignore the two glaring errors I made while cobbling this together for the picture.

Functionally, it’s almost a calculator. But it’s also almost a spreadsheet and almost a list pad. By not constraining its design to that of a common physical object, it’s able to be and do much more than anything in the physical world ever could.

It does a much better job of a number of critical features than the Calculator app, such as multipart calculations, parentheses, editing existing values, and dynamic value references. Even trivial operations are so much nicer that Soulver converts rarely even open Calculator (or use one), preferring instead to keep a Soulver window open somewhere as a scratch pad.

The interface paradigm of mimicking real-world objects shouldn’t, therefore, be applied universally.

So last week, when several good writers started discussing the merits of emulating page-turning, I took notice, especially since I added pagination to Instapaper Pro 2.2 and had to make some difficult decisions in the process. There was no question in my mind that it was better for reading than scrolling, even better than my semi-automated, low-effort tilt scrolling.

But I didn’t implement it because books have pages and lack scrolling. Books aren’t even the right physical-object equivalent for Instapaper. Not all reading happens in books.

Instapaper is more like a magazine than anything else, but I’m not about to try to reproduce the soggy, wrinkled covers from being shoved in the mailbox, the perfume samples, the ten-page “continued on” jumps in the middle of articles, or the subscription cards falling out as you’re trying to read.

(The iPad version of Instapaper that I’ve made so far, incidentally, doesn’t resemble any physical objects. I haven’t shoved huge newspaper or book graphics in there in a misguided effort to win an ADA. Just as Soulver looks like nothing but Soulver, Instapaper on iPad just looks like Instapaper.)

I implemented pagination because it improves reading, not because a related physical item separates text into pages.

Improving the product, not faithfully reproducing the physical object, always gets priority. I passed on a long, complex page-turning animation because it didn’t make sense (you’re paging up/down, not left/right) and it would have been distracting. And I opted for an extremely brief cross-fade, rather than a slide, because slides take longer and are more visually jarring.

DVD players don’t make fake whirring noises for five minutes before letting you eject a disc to simulate rewinding. Similarly, nobody should need to perform a full-width swipe gesture and wait two seconds for their fake page to turn in their fake book, and nobody should need to click the fake Clear button and start their calculation over because their fake calculator only has a one-line, non-editable fake LCD.

It’s important to find the balance between real-world reproduction and usability progress. Physical objects often do things in certain ways for good reasons, and we should try to preserve them. But much of the time, they’re done in those ways because of physical, technical, economic, or practical limitations that don’t need to apply anymore.

◆

A popular blog truncated its RSS feeds to boost site pageviews. It’s like last week, when The Atlantic changed to partial-content RSS feeds. And that was like every other week, when some publisher did something that some readers didn’t like to make a few more cents.

I dislike the intrusive advertising on Salon, so I don’t read Salon. I dislike Michael Arrington, so I never read anything on TechCrunch (even when they write about me or my products) and have taken technical measures to ensure that I never even land there accidentally and give them whatever tiny profit that one pageview is worth. I don’t like the timebombed, Unicode-breaking Clickability print-friendly view for New York Magazine, since I like reading NYMag-length pieces in Instapaper and Clickability doesn’t work well in it, so I just don’t read NYMag’s articles. I don’t like Ars Technica’s paginated articles, but since I don’t want to pay for a subscription, I just read every page separately, give them all of their separate-page ad views, and save each page to Instapaper if I want to read them that way.

One reaction I’ve never had is to think that I deserve anything from these publishers.

- Valid point: [Publisher] should consider doing it some other way because this will alienate some readers.

- Invalid point: [Publisher] should do it my way because all content deserves to be free/ad-free/full-RSS/single-page.

I see a staggering amount of entitlement every day in the form of arguments and blog posts like the latter.

We don’t deserve anything. Publishers can do whatever they want. If you don’t like it, don’t send them nasty emails or browse their sites with ad-blockers: just don’t support them. Don’t read their content, don’t link to them, and don’t talk about them. Since money’s not usually involved, vote with your attention and read elsewhere.

◆

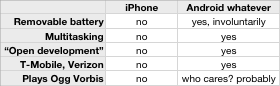

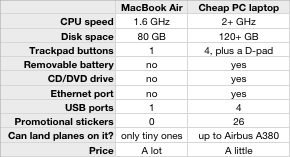

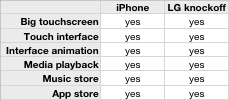

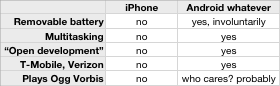

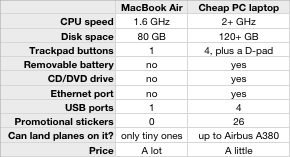

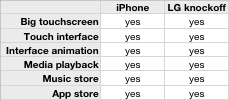

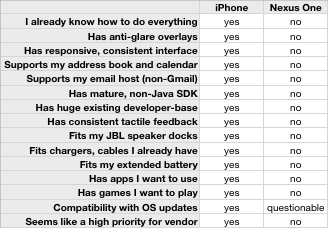

The tech press loves checklist comparisons. Let’s evaluate the iPhone to see whether it’s a good product:

Sounds like a terrible product. I bet it will fail.

Remember the MacBook Air’s launch?

Sounds like there’s no reason to buy one. (Like nearly everyone else, I complained about all of this when the Air launched. We all do it sometimes.) But it’s been very successful, especially in its later revisions, and the SSD models are great machines for people who travel a lot.

So it bothers me when either of two common failures occurs.

Assumed equality

This is when a competitor advertises (and often, truly believes) that their product is at least equivalent to another one because it has checkboxes in many similar categories.

Since the iPhone’s launch, every other phone manufacturer has made competing phones with 3” touchscreens, music playback, and square app icons arranged in a 4x4 grid. (Well, except Microsoft’s hilarious interpretation.)

It’s as if the product managers commanded their engineering teams to come up with lists of the iPhone’s “features” and copy them so their phones would sell as well as the iPhone.

Every few months, the copy-list gets longer. Everyone just finished checking off their App Store box and is wondering when the developers are going to rush in.

Miscomparison

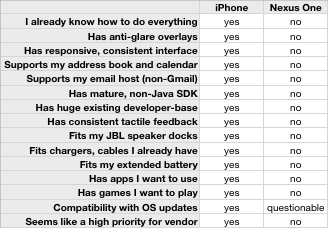

This happens when a geek or manager makes a list of features to compare two products and comes to an oversimplified conclusion based on which one has more checkmarks in its column.

The main problem is obvious: how do you determine which features go on the list? It can’t possibly be exhaustive enough to represent the entire experience of using the products, and it won’t be the same list for everyone.

Here’s why I decided not to use a Nexus One (or any other Android phone):

The Nexus One may be the better choice for people who care about what it does well, like synchronizing with Google’s services. But I don’t care about those things, and I do care about a lot of factors that the iPhone is a better fit for.

It would be ignorant and arrogant for me to presume that your priorities are anything like mine.

◆

Take a boring photo with your 50mm f/1.8 prime wide open, with a small sliver of your subject in focus. Leave large portions of the subject outside of the focal plane, regardless of how important or interesting they are.

Rotate the camera 30 degrees before shooting.

Square-crop.

Oversaturate or slightly desaturate. Tint red to look old. Under no circumstances should you apply a neutral white balance.

Blow out the highlights.

Add a very strong fake vignette.

Finish it off with a fake Polaroid frame.

For bonus points, overlay a pithy, emotional sentence, preferably about a failed romance. Ideally, the overlay should be in white Helvetica.

You’ll be popular in no time.

◆

I haven’t written about the supposed Apple tablet yet because nobody knows anything (including whether it even exists), so everyone’s just talking out of their asses about it.

Normally, I’m perfectly willing to join in and endlessly talk out of my ass about this sort of thing, but I honestly don’t have much to say on it. Nothing I can imagine about “the Tablet” gets me particularly excited. Considering one of its roles as an ebook-reader competitor is interesting, but Apple would never go with e-ink, so the Tablet wouldn’t be as pleasant for long reading as my Kindle.

But John Gruber’s predictions about the device’s role are intriguing:

And so in answer to my central question, regarding why buy The Tablet if you already have an iPhone and a MacBook, my best guess is that ultimately, The Tablet is something you’ll buy instead of a MacBook.

[…]

The Tablet, I say, is going to be Apple’s new answer to what you use for personal portable general computing.

Desktops can use fast, cheap, power-hungry, high-capacity hardware and present your applications on giant screens. They can have lots of ports, accept lots of peripherals, and perform any possible computing role. Their interface is a keyboard and mouse, a desk, and a chair. They’re always internet-connected, they’re always plugged in, they always have their printers and scanners and other peripherals connected, and their in-use ergonomics can be excellent. But you can only use desktops when you’re at those desks.

iPhones use slow, low-capacity, ultra-low-power hardware on a tiny screen with almost no ports and very few compatible peripherals. They can do only a small (albeit useful) subset of general computing roles. They are poorly suited to text input of significant length, such as writing documents or composing nontrivial emails, or tasks requiring a mix of frequent, precise navigation and typing, such as editing a spreadsheet or writing code. But they’re always in your pocket, ready to be whipped out at any time for quick use, even if you’re standing, walking, riding in a vehicle, eating, or waiting on a line at the bank. You can carry one with you in nearly any circumstances without noticing its size or weight.

Laptops are a strange, inefficient tradeoff between an iPhone’s portability and a desktop’s capabilities. They don’t satisfy either need extremely well, but they’re much closer to desktops than they are to iPhones. The usefulness and portability gap between a laptop and an iPhone is staggeringly vast. You don’t have them with you most of the time, they’re big and heavy (even the MacBook Air weighs 10 times as much and consumes about 10 times as much space as an iPhone 3GS), and they can only be practically used while sitting down (or standing at a tall ledge). Ergonomics are awful unless you effectively turn them into desktops with stands and external peripherals. But they can do nearly any computing task that desktops can do, and they’re able to replace desktops for many people.

Many devices (real, vapor, and theoretical) have tried to fill that vast portability gap between laptops and iPhones (even back when they were called PDAs and they didn’t have voice or wireless data capabilities and nobody bought them except rich people and geeks like me). Historically, none of them has come even close to mass-market penetration, including such impressively forgettable eras as the “palmtop” computer and the Tablet PC.

Slate-type devices, like the thank-God-it-was-canned Smart Display and the it-might-be-released-now-but-who-cares JooJoo, have the limitations of keyboard-less design that make long text entry or complex editing impractical. But their screens are too large to comfortably use for frequent touch input. And they’re too big to fit in a pocket.

Tiny-keyboarded devices, like the thank-God-it-was-also-canned Palm Foleo and nearly every netbook, haven’t proven to be useful to most people because they’re simply smaller laptops, replicating nearly every laptop flaw while forcing compromises on laptop functionality. The keyboards are too small to be anywhere near as useful as a desktop’s or laptop’s, and the devices are far too large to be pocketed.

(I have no idea what the Microsoft Surface is for.)

The text-input mechanism seems to be the big hurdle required to bridge this portability-and-usefulness gap. So far, nobody has nailed it.

I don’t know what Apple has in mind for the Tablet, but they nailed it with the iPhone: after decades of clunky, awkward, mediocre pocket computers, I think it’s safe to say that the large touchscreen is the best input mechanism for them.

But the decision isn’t nearly as clear for a slate-type device with a 7-10” screen, which most people assume to be the Tablet’s form factor. There doesn’t seem to be a good solution. No device in this category has ever even been close to good. This — and Jobs’ toilet comment, as mentioned in John’s article — is what kept me doubting the Tablet’s existence for so long. But even the most conservative intelligence-gathering indicates that the Tablet project is extremely likely to be not only real, but close to release. (Although the late-January event seems a bit soon and could plausibly be for other products, like iLife.)

I see two possible outcomes: either Apple has come up with a radical new input method for this form-factor that will overcome the fundamental problems that made every other similar device suck, or the Tablet isn’t this form-factor.

(Sure, there’s a third possibility: that Apple is repeating the mistakes of similar products and making their own JooJoo or Foleo. But that’s too unlikely — and stupid — for me to take seriously.)

Given that the reliable information we have to go on is… absolutely nothing, either outcome seems equally likely. I predict the new-input-method solution. I have doubts that such a product could be as much of a replacement for general-purpose portable computing as John predicts, but I’m wrong a lot.

I’ve learned never to say that Apple can’t or won’t do something simply because it’s a significant technical or design challenge.

And while I’m talking out of my ass about this like everyone else, “iSlate” is a stupid name. I’ll predict that the product’s name won’t contain “tablet”, “slate”, or the “i” prefix.

◆

My content will be stolen and republished in ways that violate my extremely permissive Creative Commons license. This will be done by both bots and humans. The bots will use my content to steal pennies from advertisers and time from people. Some of the humans won’t realize they’re doing anything wrong. The others think I won’t notice.

People will misquote, re-title, and edit my content to make it more sensational, at the expense of my credibility and their readers’ trust, in an effort to increase pageviews to their own site, like Business Insider, or increase their rank or reputation on someone else’s site, like Hacker News.

People will misread and misunderstand my content, usually because they’re inattentively skimming it for trigger phrases and concepts that confirm or inflame their own biases.

This will incite many of them to leave misguided, poorly written, ad-hominem comments on every site that republishes or links to my content. Most of the commenters will only read the (edited, sensationalized) title before commenting. They’ll insult my intelligence, call me names, tell me I suck, and refute arguments I didn’t make. Many of them will email these comments to me to make sure I see them.

But I still write.

Because amid all of the spam, fraud, and nastiness, people are reading what I write. Some even send positive feedback or valid counterarguments.

But most importantly, I’m freely expressing my ideas in public, which helps me clarify my thoughts, enhance and alter my views, and improve my writing over time.

I think I’m getting the better end of the deal.

◆

I don’t even know what to say about Merlin’s post. I’m honored. Beyond honored. Speechless. (But you know that I don’t stay speechless for long.)

Normally, when someone publicly says something so nice, most people wouldn’t call attention to it. It’s considered arrogant or immodest. But I think it would be more rude for me to pretend that he didn’t write it or that I didn’t see it or that I wasn’t completely honored by it. And I’d like to expand on the other point he’s making.

If you don’t like hearing two people each talking about how great the other is, you should probably skip this post. Go ahead, scroll past — I won’t take offense. Come back next week to read my latest incorrect Apple predictions. There! Some modesty. But really, my predictions are hilariously bad. Check out this gem on my old site, written three months before the iPhone’s unveiling.

Merlin’s too modest to tell you a few additional details about the story. The Kindle feature, for instance, was his idea. He emailed me last spring, asking very nicely if I’d consider implementing it. When Merlin Mann gives you an idea, you take it, because it’s probably a very good idea. And it was. (Unfortunately, getting the auto-delivery through Amazon’s transfer service is so unreliable that I’m about to release a replacement that’s slightly more manual but 100% reliable. But that’s an implementation detail.)

Back to the point: We’re all in this together. It’s an entire ecosystem. And that’s why Merlin’s article isn’t just about me. (I’m already embarrassed enough to be featured so prominently in the title — when I read it, while painfully waiting for the article’s body to load over my slow cellular connection on the train, I thought I was getting in trouble for something.)

The bigger message isn’t that everyone should write bookmarklets or attempt to send automated emails to Amazon’s Kindle conversion service, but that they should choose to consume (and, if they can, produce) high-quality work in their preferred medium. Mine, and Merlin’s, is text.

I can’t overstate the importance of this demand. Instapaper is useless without the two key ingredients that it was created to bring together: high-quality text content and people who choose to read it. And neither ingredient can exist without the other.

I often hear people defending their “guilty pleasure” habit of subscribing to awful blogs or reading tabloids or watching bad TV with phrases like “It’s good sometimes” or “It’s not that bad” or “I have to follow what’s happening.”

There’s only so much time in the day, and only so many days in our lives. There’s enough great work out there that you don’t need to waste any time with anything that isn’t great.

Do you really need to subscribe to that collection of RSS feeds that cumulatively publish hundreds of items per day? If you currently do (I’ve been there), do you really need to read every headline? Exercise: Don’t open your feed reader for a week. Did you miss anything?

Do you watch TV because it’s there, or because you really want to be watching that show? Exercise: Cancel your cable service and just get the really great shows from Netflix or iTunes. When you’re out of shows for a few days and you have some free time, do anything else. You’ll save a bundle of money that you can spend on anything else. Sign up for cable again when you really think it’s worth the time and money. (For most people who try this, that day never comes.)

I can’t give you advice on how to be a better producer. Merlin’s the guy for that. But I’ll do my best to convince you to be a better consumer.

Instapaper is one small part of that. Give Me Something To Read is another.

I’ll even offer you the hosts-file target IP that I’ve been using. It’s on an old server that I need to operate for a while. Just map any domain you never want to accidentally visit (like if an RSS item or bit.ly link sends you there without warning) to 66.135.33.106 in your hosts file. Here’s an example from mine:

66.135.33.106 www.techcrunch.com
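If you have more than a couple of domains to block, a few lines of Python can generate the entries for you. This is a minimal sketch: the `hosts_lines` helper is a name I made up for illustration, and it only builds the text. Pasting the result into your hosts file is still up to you.

```python
SINK_IP = "66.135.33.106"  # the target IP mentioned above


def hosts_lines(domains, sink_ip=SINK_IP):
    """Return one hosts-file line per domain, each pointing at sink_ip."""
    lines = []
    for domain in domains:
        domain = domain.strip().lower()
        if not domain:
            continue  # skip blank entries
        lines.append(f"{sink_ip} {domain}")
    return lines


print("\n".join(hosts_lines(["www.techcrunch.com"])))
```

Hosts files match exact hostnames, so a site’s bare domain and its `www.` variant each need their own line.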

Where technology can’t be your guard rail, start enforcing a higher standard with self-control. Do you really need to watch that show or eat at that fast-food restaurant or spend twenty seconds of every day skimming that blog’s headlines? Is it really a net gain?

Life’s too short to drink bad coffee or read bad blogs.

Make the effort to care.

◆

Marc and Clint are defending ebook readers from the categorical criticism and doubt in blogs over the last few days, sparked by remarks by John Gruber and Jason Kottke.

I got a Kindle 2 in February, mostly so I could make Instapaper work well on it. I expected it to be used mostly as a development device that I would occasionally use to read a book.

The following week, Tiff was packing lightly for air travel, and I made her take the Kindle instead of a handful of books. She thought it was weird and unnecessary, but she semi-reluctantly tried it for the trip.

I just got it back a few weeks ago.

Tiff plowed through more than 20 books on the Kindle. At one point in the middle, she read a book on paper (because it wasn’t available on the Kindle) and absolutely hated it. Her commentary was priceless: she couldn’t easily look up word definitions, she couldn’t change the font size, it was awkward and lopsided to hold near the beginning and end, and it would lose her place if she fell asleep while reading.

Most people won’t instantly jump to buy ebook readers after seeing them in TV commercials or liveblogged keynotes. They need to be experienced in person. (The ability to do this easily will give Barnes & Noble a huge advantage over Amazon.) And they’ll spread via good, old-fashioned, in-person referrals from friends and coworkers.

“Oh, is that the book reader thing? I heard about that… How do you like it? Can I see it?”

And how many Kindle owners have you met who didn’t love it?

This isn’t a recipe for explosive growth. They’re not taking over or killing anything. And techies don’t need to care much for them to succeed. Engadget and Gizmodo can keep obsessing over tiny LCD devices and foldable Acer tablet concepts and are safe to completely ignore this market once it’s no longer shiny and novel. But there are a lot of people — including, significantly, most people over age 40 — who don’t like reading tiny text on bright LCD screens in devices loaded with distractions that die after 5 hours without their electric lifeline.

And this is one 27-year-old with 20/20 vision (for now) who also prefers it.

Most of Kottke’s problem with ebook readers can be solved in software:

But all these e-readers — the Kindle, Nook, Sony Reader, et al — are all focused on the wrong single use: books. (And in the case of at least the Nook and Kindle, the focus is on buying books from B&N and Amazon. The Kindle is more like a 7-Eleven than a book.) The correct single use is reading. Your device should make it equally easy to read books, magazine articles, newspapers, web sites, RSS feeds, PDFs, etc.

And I’ve already solved part of that. Despite making an iPhone app optimized for reading magazine-length text, I mostly read long content with my very beta Kindle-export feature (which sucks, and is about to be replaced with a much better version) because it’s so much more comfortable — the e-ink screen really is much easier on the eyes, and much more text fits on the Kindle’s screen than the iPhone’s. (If the rumor consensus is to be believed, the Apple tablet unicorn will only solve the latter problem.)

Writing off an entire category of devices because of easily improved software limitations is invalid and unwise. I love reading on my Kindle, and I hardly ever read books. I’ll do my part to make blog posts, online magazine articles, and news stories just as easy to read as books.

I don’t expect the ebook-reader market to be the next hot thing. But it’s also not a fad, and it’s not going away. These are great devices for reading, even if you need to use one before you’re convinced, and any objection to their current software limitations is likely to be temporary.

Addendum on feed-reading and PDFs

I’m not including RSS feeds or PDFs in the discussion. RSS feeds aren’t reading: they’re alerting, discovering and filtering. My preferred workflow, which Instapaper embodies, places RSS-inbox-clearing entirely before the reading step as its own process that’s always done with high speed using a native feed reader on a regular computer.

For a variety of technical and practical reasons, I don’t consider PDFs to be a good reading experience on any platform. It’s also not possible to universally transform them well, or even acceptably, to any screen smaller than their intended print size: letter-sized paper, usually. The Kindle DX comes close, but it’s a large, specialized device that’s not as well suited for the mass market as ebook readers with screens in the 6” range.

There’s also always going to be a subset of web and book content that doesn’t work well on ebook readers, such as content with a lot of tables, diagrams, photos, or embedded source code blocks. This matters to some, but lack of good support for this type of content won’t prevent the category from being generally successful.

◆

From John Gruber’s article about Windows 7 adoption:

The truth is that no one really knows why Vista fared so poorly in the market. It defies a simple explanation.

I’ll try to provide one: Most people had no reason to upgrade, and plenty of reasons not to. I’d guess that Gruber’s theory stated earlier in the article is spot on:

What if the reason why most PCs are still running XP has nothing to do with whether Vista is “good” or “bad”, but rather is the result of indifference on the part of whoever owns these untold millions of XP machines, be they at home or in a corporate IT environment. I.e., that switching to Vista, regardless of Vista’s merits, seemed like too much work and too much new stuff to learn; that the nature of the PC as a universal commodity is such that most of them belong to people who value “old and familiar” more than “new and improved but therefore different”.

I think this explains Vista’s poor adoption, but as part of a much bigger problem: the lack of a reason for regular people to ever upgrade to any new OS release.

Our industry has collectively taught average people over the last few decades that computers should be feared and are always a single misstep from breaking. We’ve trained them to expect the working state to be fragile and temporary, and experience from previous upgrades has convinced them that they shouldn’t mess with anything if it works. They’ve learned to ignore our pressures to always get the latest versions of everything because our upgrades frequently break their software and workflow. They expect unreliable functionality, shoddy software workmanship, unnecessary complexity, broken promises from software marketers, and degrading hostility from their office’s IT staff.

When we tell them that the new OS is faster and better, only to have the upgrade break a piece of software that we don’t care about but they really do, we burn our likelihood that they’ll ever willingly upgrade again. Every time we tell them that they can now easily edit video or make DVDs, only to have them abandon their first effort in frustration and never attempt it again because our software sucks, we drive them closer to indifference or resentment toward future technology.

So when our nontechnical aunts refuse to upgrade from their Pentium III PCs with Windows 98 that work well enough for them and are set up exactly how they prefer, we have nobody to blame but ourselves.

The upgrade market for average PC owners is dead. We killed it.

It was never very strong to begin with, because most people don’t know or care about OS updates, as Gruber pointed out. They get the new OS whenever they buy their next computer, because we don’t give them a choice.

What’s driving computer sales? Well, in the ’80s and ’90s, we were making huge hardware advances that affected a large portion of normal people. Every few years, people needed to upgrade their computers for a new killer app or a massively improved generation of their existing apps.

But the rate of such changes that are relevant to average people has plummeted in the last decade. Graphical interfaces, multitasking, SimCity, porn, email, shopping, and dating sold a lot more new computers than nearly anything we’ve come up with since 2000 except malware. (I honestly believe that malware carried computer sales for most of the last decade. That only worked because we’ve taught people, with a combination of misinformation and omission, two great lies: that computers slow down over time, and that the only way to fix a malware infestation is to buy a new computer.)

Hardware, software, and the internet have all reached mature plateaus of dramatically slowed innovation. In 1998, when everyone was happily using long filenames and browsing the internet and playing their first MP3s and editing their first scanned photos to email to their relatives, a five-year-old computer couldn’t easily do any of these things.

But what common tasks in 2009 can’t be accomplished by a 2.8 GHz Pentium 4 PC with Windows XP SP2 and a cable internet connection — the average technology of 2004? Not much that regular people actually do.

We’re still burning their trust, time, and money, but we’re offering much less in return. It shouldn’t surprise any of us that they stopped caring.

◆

Earlier this week, all data stored on T-Mobile Sidekick devices, including contacts, calendars, messages, and photos, had almost certainly been lost in a major infrastructure disaster by Microsoft. Fortunately, Microsoft is now claiming that “most, if not all” of the user data will be restored. But the Sidekick products and T-Mobile have suffered irreparable reputation damage.

It’s easy to jump on Microsoft about this, but I can’t fault them entirely. They absolutely should have had offline, offsite backups. But major outages and data loss happen all the time. Our industry is based on incredibly complex and interwoven systems that shuffle massive amounts of data around, frequently hitting physical and practical storage, bandwidth, and performance limits that need to be worked around in ways that necessarily add complexity, dependencies, and potential failure scenarios.

The real problem is the Sidekick’s design, as I learned from TidBITS’ take on the disaster:

Unlike many smartphones, Danger-based phones store data in a cloud - servers located hither and yon that you don’t manage, but are imagined to be universally and continuously accessible. These phones retrieve information as necessary and cache a temporary copy on the phone, a copy that’s not intended to be a permanent set of stored records. The data also isn’t intended to be synced to a computer as with a BlackBerry, iPhone, Android, or other smartphone, but accessed via a Web site or a phone.

It sounds more like the Sidekick data lives on something akin to an IMAP server: you can have some local copies, but the server is master. With IMAP, the copies persist even after a computer or phone restarts, of course, just as you might expect. But in the Danger approach, locally cached data is erased on restart or if the battery runs out of power.

This design, not a failed SAN upgrade with no backups, is the most severe flaw and most negligent mistake here. It didn’t technically cause the Sidekick disaster, but it dramatically increased its severity from an inconvenient service outage to a complete loss of all customers’ data.

The “cloud” — hosted, centrally-managed services — cannot be your only copy of data. Just as RAID is not its own backup, cloud services are not inherently backed up, although they usually make every effort to maintain data integrity and regular backups. But even when done well, that only covers a subset of loss scenarios. For example, if someone gains access to your account and “legitimately” (as far as the service is concerned) deletes your data, or a botched sync operation unhelpfully synchronizes a mass deletion across all sync clients, cloud infrastructure probably can’t help you. Even if the provider has offline backups, the chances of it retrieving them just to recover your old files from an isolated incident are slim.

You aren’t in control of your data if you can’t easily and frequently make useful backups onto your own computer and your own media.

I recognize that it’s hypocritical for me to say this as the lead developer of Tumblr, which does not yet offer an automated feature for users to download backups of their blog content. So I took some time this week and started to write one. I’m happy to announce that Tumblr will be releasing an easy backup tool in the coming weeks. (I will also make an easy backup feature for Instapaper shortly.)

All of my blog’s content, with images, is less than 200 MB. A list of my entire Instapaper reading history is less than 1 MB. The sum of my contacts and calendar data, synced by MobileMe, is probably less than 5 MB. That’s nothing, and given how much time I’ve put into the creation of all of this data, and that it would only consume a third of a $0.26 Taiyo Yuden CD-R (or less than 5% of a $0.45 TY DVD+R), it’s embarrassing that offline backups onto my own media haven’t become routine.
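As a rough check of that arithmetic, here is a minimal sketch. The data sizes are the upper bounds quoted above; the disc capacities (700 MB for a CD-R, 4,700 MB for a single-layer DVD+R) are nominal figures I'm assuming, since real usable space varies a little by disc and format.

```python
# Upper-bound sizes in MB, taken from the figures quoted in the paragraph above.
DATA_MB = {"blog": 200, "instapaper_history": 1, "contacts_and_calendar": 5}

# Assumed nominal capacities in MB; these are not stated in the post.
CD_R_MB = 700
DVD_R_MB = 4700


def fraction_of_disc(total_mb, capacity_mb):
    """Return the share of a disc that total_mb would occupy."""
    return total_mb / capacity_mb


total_mb = sum(DATA_MB.values())
cd_fraction = fraction_of_disc(total_mb, CD_R_MB)
dvd_fraction = fraction_of_disc(total_mb, DVD_R_MB)

print(f"Total hosted data: {total_mb} MB")
print(f"Share of one CD-R: {cd_fraction:.0%}")
print(f"Share of one DVD+R: {dvd_fraction:.1%}")
```

Under those assumptions, everything fits in a bit under a third of one CD-R and under 5% of one DVD+R, so the cost of media is not a real obstacle.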

So I’m hereby starting the trend of backing up my hosted data just as carefully, completely, and frequently as my local files. I know this won’t spread to most people, because most people don’t care. But I certainly do, and if you’ve made it this far into this post, you probably do, too.

If my data suddenly and permanently disappears from a hosted service, it should only be an inconvenience, not a loss.

◆

Ged Maheux of the Iconfactory recently wrote Losing iReligion, in which he criticizes the business prospects of the App Store. He cites some general concerns, but much of the post is based on the unfortunate commercial failure of their Ramp Champ game.

By Ged’s account, Ramp Champ was developed with a sizable budget and marketing push (not relative to most games, but relative to most iPhone apps):

When the Iconfactory & DS Media Labs released our latest iPhone game, Ramp Champ, we knew that we had to try and maximize exposure of the application at launch. We poured hundreds of hours into the game’s development and pulled out all the stops to not only make it beautiful and fun, but also something Apple would be proud to feature in the App Store. We designed an attractive website for the game, showed it to as many high-profile bloggers as we could prior to launch and made sure in-app purchases were compelling and affordable.

Subjectively, I remember seeing massive build-up for it from the people I follow online. It was clearly a major effort. But it has apparently failed:

The lack of store front exposure combined with a sporadic 3G crashing bug conspired to keep Ramp Champ down for the count.

… Ramp Champ’s sales have not lived up to expectations for either the Iconfactory or DS Media Labs. What’s worse, many of the future plans for the game (network play, online score boards, frequent add-on pack releases) are all in jeopardy because of the simple fact that Ramp Champ hasn’t returned on its investment.

Its sales could have been disappointing for any number of reasons. Maybe people just didn’t like the gameplay, which presents carnival Skee-Ball-style ball-rolling, target-knocking-down action with extremely strong, detailed graphics and atmosphere. But, as Ged points out, Freeverse’s Skee-Ball has been successful, and it’s a very similar type of game.

Simplistic gameplay doesn’t prevent iPhone games from being successful — in fact, it usually helps. One of the most popular recent games is Paper Toss, a game so simple and mindless that watching someone play it on the subway made me lose much faith in humanity.

What happened? As usual, I have a theory: there are two App Stores.

App Store A: Simple, shallow games and apps with mass-market appeal. These live and die by the App Store’s “Top” lists, so success is difficult to achieve and is short-lived at best, but with the largest potential payoff for the lucky few at the top. These apps are developed quickly and cheaply, and are rarely updated once their initial popularity (if any) dies down. Very few are priced above $0.99. Impulse-buying is king, with most purchases happening on the phone itself, and most buyers don’t know or care whether you’re an established developer unless your name begins with “MLB”. Nearly every best-selling app falls into this category.

App Store B: Apps and games with more complexity and depth, narrower appeal, longer development cycles, and developer maintenance over the long term. These tend to get little attention from the “Top” lists, instead relying on the much-lower-volume App Store features (e.g. “Staff Picks”), blogs, reviews, and word of mouth. More of their customers notice and demand great design and polish. More sales come from people who have heard of your product first and seek it out by name. Many of these apps are priced above $0.99. These are unlikely to have giant bursts of sales, and hardly any will come close to matching the revenue of the high-profile success stories, but they have a much greater chance of building sustained, long-term income. Due to the likely lower revenue cap, these are usually developed on small budgets by individuals who can do most or all of the work themselves.

These two stores exist in completely different ecosystems with completely different requirements, priorities, and best practices.

But they’re not two different stores (“Are you getting it?”). There’s just one App Store at a casual glance, but if you misunderstand which of these segments you’re targeting, you’ll have a very hard time getting anywhere.

The Iconfactory seems entirely set up for producing excellent apps for App Store B. Their most well-known app, Twitterrific, is solidly in that camp. The problem with Ramp Champ is that they targeted App Store A in gameplay depth and type (which may not have been intentional), and budgeted for App Store A’s expected sales for a hit. But they built, designed, and promoted it as if they were targeting App Store B.

Freeverse’s Skee-Ball game targeted App Store A, but in a way that’s more likely to appeal to its more simplistic, impulse-oriented demands, starting with the name and icon:

Skee-Ball is a registered trademark that the Iconfactory didn’t license (Freeverse did), so the Iconfactory couldn’t call theirs by that name, make the game look anything like it, or even mention it anywhere in the description. But that’s how most people know this sort of game.

App Store A’s customers don’t read our blogs. They don’t see the professional reviews. They also don’t see the Staff Picks section in the store because it’s not in the phone version. Your only chance to get these customers is with the “Top” and category lists, so you need to get them quickly and efficiently. When they breezed past “Ramp Champ” in the low-information list view, they probably couldn’t tell what it was.

The primary screenshots of each game also show a clear difference for people who did select either app for more information:

Skee-Ball is immediately recognizable, well-known, and obvious. But Ramp Champ looks likely to lose out on nearly every impulse purchase from people who don’t want to spend much time looking into it — which is nearly every buyer for App Store A.

The Iconfactory’s apps are able to compete strongly when people choose apps based on research, reviews, or feature comparisons. But that’s not how App Store A’s customers operate. Whether Ramp Champ is a better game than Skee-Ball is irrelevant to them because they’ll never take the time to find out.

Many of Ged’s complaints — in fact, many of everyone’s complaints relating to App Store economics, promotion, and ranking — only apply to App Store A. He’s right to condemn the practical economics of it and question whether to invest significant resources into it again.

But App Store B is doing fine. It’s remarkably stable. This is the store I live in with Instapaper and happily share with many extremely talented developers, including Loren, James, Adam and Cameron, Jeff and Garrett, and — unquestionably — the Iconfactory.

We enjoy great advantages by targeting App Store B:

- Customers are much less price-sensitive.

- Mainstream press is much more likely to review our apps.

- We don’t need to care about our ranks in the “Top” lists.

- Our App Store user reviews and star ratings are much less relevant.

- Promotion in the App Store is a happy bonus if it happens, not a necessity that we depend on.

- Income is much more steady. It occasionally spikes upward with promotion, but the decline afterward is slow and predictable.

- Since we can predict a reasonably accurate revenue minimum for at least a few months, we can afford to issue updates indefinitely, invest in new functionality, and polish the hell out of our product.

There are also, of course, some disadvantages:

- Apps are easy to position here, but games are much more difficult. Games tend to have much higher initial-investment requirements and can’t command as high a price. But they can much more easily charge for upgrades, and people burn through them more quickly and therefore demand more of them.

- It’s extremely difficult for anyone with employees, or any individuals without other income, to afford to be here. Some people can make enough to cover full-time expenses, but most can’t. (Although I’d say the same thing about the entire App Store, really — at least in our section, we don’t delude ourselves into thinking we’ll be the next Flight Control.)

Personally, I would never trade this for the hit-driven, high-risk, quick-flip requirements of the mass market, even if it means that I’ll never be profiled in Newsweek for making hundreds of thousands of dollars in a few months with the App Store.

◆

I ride the New York subway every day, and I see a lot of trendy gadgets. New York consumers tend to adopt portable technology early and are much less price-sensitive than most of the country. (Our cost of living is so much higher that nationally constant prices are comparatively low — $300 was two weeks’ rent in Pittsburgh, but that would only buy a few days in most New York apartments, so a gadget that costs $300 is comparatively less expensive here relative to incomes and the other costs in our lives.)

Today, John Gruber said about this Palm Pre poor-sales speculation:

Anecdotally, I haven’t seen a single Pre in use in real life.

The subway is a great sample of the generally upper segment of the market. Here’s roughly what I’ve seen since the Pre’s release:

- One Pre. A friend bought one. She’s the only person I know with one.

- A lot of iPhones. On any given train, there will be at least three in sight — and I can usually only see about a third of the car. There are probably many more in pockets and bags.

- Frequent iPod Touches. About one for every 10 iPhones.

- A lot of BlackBerries with their owners either reading email or playing Breakout. (Really. That’s all they do.) BlackBerries are approximately tied with iPhones but are slowly losing ground.

- An occasional dumbphone, but less frequently than the iPod Touch. These are probably underrepresented because their owners have little reason to take them out on the subway.

- Almost no recognizable Windows Mobile devices. The Motorola Q and similar Windows Smartphone (non-touchscreen) devices were very popular in 2006-2007 but quickly fell off the planet.

- No non-Pre devices from Palm. Treo usage vanished over the last two years.

- About one smartphone per day that isn’t an iPhone, BlackBerry, Palm, or Windows device — usually one of those tacky T-Mobile things.

- iPod Nano: about one for every 5 iPhones, and the owner is usually watching a movie. (Otherwise they wouldn’t have it out.)

- iPod Classic: about one per day, and the owner is usually playing Solitaire.

- iPod Shuffle: about one per day that’s visibly clipped somewhere.

- Non-iPod, unrecognizable music players: about one per day.

- About one first-generation Kindle per week.

- 3-5 Kindle 2s per day, and increasing fairly rapidly. Only a few months ago, it was 0-2 per day.

- Zero Kindle DXes so far.

- One Sony Reader.

- About one PSP every two weeks, and one Nintendo DS every month.

- One Zune.

Among the iPhone OS devices, many people have multiple pages of apps. I assumed that most casual iPhone users would stick to the default set, but that hasn’t been prevalent.

The most frequent activities on iPhone OS devices are, in this order:

- Listening to music

- Using Mail (iPhones only)

- Playing a game (casual, non-action games have clear dominance, such as Solitaire and Sudoku)

- Watching video (usually a popular, recognizable TV show or movie)

- Using Stanza or Kindle (I can’t often tell the difference)

- Using another non-game app

(I still haven’t spotted someone using Instapaper, but some coworkers have. That’s why I keep looking to see what everyone’s doing on their iPhones. Hey, at least I’m honest about my vanity searches.)

Some interesting conclusions I can draw from this admittedly unscientific, imprecise, and limited sample:

- The Kindle 2 is really catching on. The price drop may explain the boost in recent months. But given that New York subway riders have always had disproportionately high newspaper and book readership relative to most Americans, I wouldn’t consider this to be nationally representative.

- The iPhone and iPod Touch are serious portable gaming platforms for average people who would probably never have bought a dedicated portable game system.

- Breakout seems to be the only game on BlackBerries.

Most importantly, it’s very clear that Windows and Palm aren’t in this game anymore.

◆

A lot of people seem to have constant issues with their phones, computers, printers, hard drives, and other technology breaking or failing.

None of my technology ever breaks at the rate that I see from other people.

Even though it’s a bit presumptuous, my best guess is that I just treat my electronics better than most people.

Part of it is my background. I didn’t grow up in a rich family. I didn’t have a computer until the sixth grade. With the exception of the occasional Christmas present, I needed to buy all of my technology with my own money, even when I made $4.25/hour stocking shelves at a small grocery store at age 15. In high school, I paid $40/month of my own money for cable internet service. I didn’t have a cellular phone or a laptop until I graduated from college in 2004. Since it was difficult and expensive for me to get these things, I treated them extremely well, and I continue to treat all of my belongings with the same respect today.

I don’t put rubbery cases around my phone or foam sleeves around my laptop because I don’t drop them. I just don’t. I’ve also never spilled a drink on any of my stuff. (Although if I ever did drop my laptop or spill a drink on it, it’s insured, and yours should be, too.)

I’ve never broken or scratched a screen. I’ve always kept PDAs and iPhones in a dedicated pocket with the screen facing inward. The back of my Palm Vx had minor dents from having run into a few table corners, but the screen was perfect — that’s why you should keep your screened devices facing into your leg/butt, not toward the world.

I’ve only ever had two hard drives die, and they were the infamous IBM “Deathstar” 60GXP model that, along with the 75GXP before it, had record-setting failure rates and destroyed IBM’s reputation in the hard-drive business.

After two years of being carried back and forth to work every day and heavily used as my only computer, my PowerBook G4 didn’t have a single scuff, scratch, dent, or worn-away area anywhere on it. I never got the keyboard-on-screen imprint because I kept it in a backpack in an arrangement that only put pressure on its bottom, not its screen lid.

Almost none of my computers have suffered a critical component failure before becoming comically obsolete or falling out of use. My sister was using my handed-down 2001 PC for the last few years until something finally prevented it from booting a few months ago.

I’ve never lost my phone. (Or my keys, or my wallet.) Not even temporarily. I always know where it is, because I only ever put it in a handful of spots. Is it in my left-front pocket? No? Then it’s on my desk during the day or my nightstand at night.

When I hear people complaining that their iPhone screen cracked or their aluminum laptop is dented or they left their BlackBerry in a taxi, I can’t help but silently blame them and be glad that I’m more attentive and careful with my belongings.

◆

Do you ever get tired of blogging the same old debunkment of every wildly unlikely, off-base, and misleading Apple rumor report every time a cheap rumor site writes about them to get a bunch of pageviews so they can earn 63 cents from AdSense?

Sometimes, I do.

There’s not much to say about the Apple tablet rumors except that there have always been Apple tablet rumors and nothing has ever come of them. Except that inexpensive portable touch-screen media computer with near-ubiquitous internet connectivity that we already have in our pockets because Apple released it two years ago and many of us are already on our second or third one. But nearly every rumor prior to its announcement was completely wrong. (Ask Kevin Rose for a summary if you’ve forgotten.)

I’m sure Apple is working on something really cool. Apple is always working on cool things. Most of them never get beyond prototypes. Some become real products — hopefully, only the best. The concept of a cheap tablet computer is so problematic, as we know it, that I can’t see Apple wanting to release one.

The biggest indicator that it’s not worth their trouble is to look around at who’s requesting it, anticipating it, and assuming it’s on the roadmap: Tech geeks and “analysts”. (Quick aside on analysts: It’s a hilariously corrupt business full of payola and bullshitters. But back to geeks.)

Tech geeks are terrible at knowing what they want from technology. (A faster horse.) It’s embarrassing, because we’re supposed to be the experts. But we suck at this. If you listen to geeks, you get products targeted at geeks, usually at the tremendous exclusion of design, usability, marketability, and usefulness to regular people.

Then, when someone shows us what we really want but were too narrow-minded to ask for, we ridicule it and say it’s too expensive or too small or too big or too limited or too closed or too underpowered or too light or too heavy or too ugly or too stylish. We trash it on our blogs and make fun of the people who wasted their money on it. Six months later, we want one.

Geeks are terrible customers, too. We’re whiny and demanding and entitled and self-important and high-needs, and we’re incredibly fickle. We switch products and services much more frequently, and for much more trivial reasons, than regular people. We have low tolerances, long memories, and little brand loyalty.

The products made by and for geeks can occasionally create profitable businesses, but aren’t likely to ever get mass-market appeal or noticeably change the marketplace, like Android phones or Ogg codecs or desktop Linux or social media cross-posting group dashboard follow feed management service frameworks.

In other words, targeting us is a terrible idea for a consumer products company. It has never been Apple’s business to target us. Therefore, whatever they have up their sleeve this time — if anything — is unlikely to resemble any of our predictions, assumptions, or expectations.

When they do announce their next product, we’re probably going to be disappointed by some seemingly significant aspect of it that turns out to be completely insignificant, and after six months of making fun of it and the people who buy it, we’ll all realize that it’s actually what we really wanted, fall in love with it, and buy one for ourselves.

◆

I’ve made a dramatic shift in my diet over the last few weeks: eating almost no meat. (update: thoughts on fish.)

There are plenty of good reasons not to eat meat, including:

- The treatment of the animals is awful. The more you know about industrialized meat production, the less you want to support it. (And it’s not just for cows. Chickens and turkeys aren’t much better, and pigs are probably the worst.)

- High-volume meat production creates a large environmental burden, usually as a result of having to feed the animals so much and figure out what to do with their waste.

- Meat is more calorie-dense than many alternative foods, and red meat in particular is unhealthy to eat frequently. Non-meat-heavy diets can generally be much healthier.

Michael Pollan’s In Defense of Food makes a great argument for low-meat diets. (You should really read it regardless of your thoughts on meat. Do you eat? Then it’s relevant to you.)

Wait, so are you a vegetarian now?

No.

I’m not big on all-or-nothing obsessiveness. I’m not a recovering hamburger addict who will sink back into meat abuse if I ever have another taste again. All things in moderation.

The problem isn’t eating animals. It’s a lot of people eating a lot of animals. If demand were reduced to 25% or less of its current level, we’d see massive environmental and health improvements. Humane animal treatment is trickier, since you’re still killing and eating them, but it could be improved if less meat were needed and it could command a higher price. For instance, actual free-range (not the bullshit kind) and grass-fed animals would become more practical.

A few weeks ago, I decided to significantly reduce my meat consumption. To start, I went all-vegetarian for one week to force myself to broaden my horizons a bit (especially for office lunches) and try new non-meat options. It worked, and was much easier than I expected.

Now, I’ve lowered my overall meat consumption to approximately these levels, which I intend to maintain:

- Chicken or turkey: 1-2 meals per week.

- Beef: 0-1 meal per month.

- Pork: Almost never. Occasionally as a minor ingredient in something else.

With such a severe reduction, I’ll achieve most of the benefits of vegetarianism, but without many of the inconveniences. It’s still ridiculously easy to get good meals at restaurants or while traveling. I don’t even like tofu or giant mushrooms, and it’s still much easier than I expected to avoid meat most of the time and still eat healthy, satisfying, widely available meals.

Try it.

If a lot of people made this change, we could make a big difference on many important fronts.

Do the vegetarian week, then see how little meat you really need to eat. You may be pleasantly surprised at how easy and practical it is.

◆

Steve Jobs quoted this BusinessWeek story in a keynote a few years ago:

Vista, the latest version of the software giant’s Windows operating system, looks like it could turn out to be one of the great missteps in tech history.

But this is the wrong way to interpret Microsoft’s marketshare and mindshare losses to Apple since Windows XP’s release in 2001.

Vista, itself, wasn’t a misstep. Microsoft must keep updating Windows regularly to remain competitive and preserve revenue. It had problems and delays, but the concept was solid and is still defensible even in hindsight.

In 2003, Joel mentioned Microsoft’s impeccable strategy relative to the failed software giants of the ’90s such as Lotus, VisiCorp, and MicroPro:

Microsoft was the only company on the list that never made a fatal, stupid mistake. Whether this was by dint of superior brainpower or just dumb luck, the biggest mistake Microsoft made was the dancing paperclip. And how bad was that, really? We ridiculed them, shut it off, and went back to using Word, Excel, Outlook, and Internet Explorer every minute of every day. But for every other software company that once had market leadership and saw it go down the drain, you can point to one or two giant blunders that steered the boat into an iceberg.

I think it’s safe to say that Microsoft is hurting now. They’re probably not going out of business in our lifetimes, and they’ll likely continue to dominate many markets for at least another decade or two. But a major shift has occurred between 2001 and today that’s causing some big problems for Microsoft:

- Apple’s converting a lot of former Windows users. There’s still a long way to go, but Apple’s growth (into Microsoft’s market) shows no signs of slowing down.

- Microsoft’s Windows and Office upgrade revenues have been disappointing.

- For the first time in most of Microsoft’s history, they’re repeatedly failing to dominate desirable secondary markets, including portable media players, mobile phones, and web search (actually, every desirable web market). Microsoft’s entry into a market previously meant imminent death of any other players, but now it’s usually a source of comedy for the tech press.

- Many of their core products, including Windows, Windows Server, Office, and Internet Explorer, have been attacked by either strong competition or creeping irrelevance.

- Microsoft’s brand perception among consumers has been heavily damaged. Many more people now associate them with bugs, delays, shoddy products, and failure.

As far as I can tell, none of these are Vista’s fault, specifically. Vista is just a logical continuation of Microsoft’s style and culture. Die-hard Windows fans like it.

The bigger problem is that Microsoft isn’t very good, and I mean that in a big way. I was too young to appreciate their word-processor and spreadsheet battles of the very early 90s, but that’s what Joel typically cites as an example of Microsoft’s excellent strategy and their production of high-quality software. They may have been great back then, but that’s not the Microsoft we know today.

Today’s Microsoft is impulsive and sloppy. It has become massive and complex with too many layers of management, committees, and bureaucracy to produce anything great — the best they can hope for is good, and even that’s rare. Their products are weakly anticipated and receive mediocre reviews. Most importantly, they’re unable to grow their positions in the technology industry’s biggest new markets as their old markets slowly erode. Top leadership seems to have no strategy or direction for the company, and there’s no sign of any problems being meaningfully solved in the foreseeable future.

After Internet Explorer 6’s release in 2001, when Microsoft had crushed all competing browsers, they rested on their laurels for too long. Now, Firefox and Safari are eating their lunch. Microsoft finally scrambled to release IE 7 and 8, but they delivered too little, too late — Internet Explorer’s marketshare will probably dip below 50% within three years. (I’ll bet Jeff Atwood a beer on that.)

This pattern didn’t just happen in web browsers: it applies to the entire company over a longer interval. After effectively destroying most of the competition by the mid-90s, Microsoft got lazy. But then the internet exploded, and Microsoft wasn’t part of it. Soon afterward, Apple got their act together. Linux became a popular server platform. Google dominated web search, advertising, and applications. Microsoft’s being assaulted on nearly every front by companies that are producing much better products, and they can’t catch up.

None of this is Vista’s fault.

Vista has no major failures. Rather, it’s an immense collection of tiny failures: awkward interfaces, hostile behavior, ugly design, and tons of small bugs. They’re the same kind of tiny failures that plague all of Microsoft’s modern products, which is why I have absolutely no doubt that Windows 7 will suffer the same fate.

Microsoft’s woes aren’t specific failures of strategy or execution: the company culture, structure, inertia, and ethos are so deeply flawed that it can’t recover. Microsoft can never do what Apple and Google are doing today. It’s too broken. Insert your Titanic metaphor of choice.

Joel paraphrased Bill Gates in 2000:

One of the most important things that made Microsoft successful was Bill Gates’ devotion to hiring the best people. If you hire all A people, he said, they’ll also hire A people. But if you hire B people, they’ll hire the C people and then it’s all over.

Bill Gates is a very smart guy — he’s fully aware of the problems that his company now exhibits. Maybe he semi-retired (or whatever he did) because he saw that it’s all over long before we will.

◆

Through a series of coincidences, some lucky positions, a few prominent inbound links, and just pure longevity and endurance, the size of my site’s audience is finally nontrivial. It’s nowhere near the point where putting up awful AdSense ads would generate enough to pay my electric bill, and by any other prominent blogs’ standards, it’s completely insignificant.

But I have enough readers now to cause two problems:

- I can’t express any opinions without being willing to accept a significant negative response. Anything I say could inadvertently end up getting linked from a popular site or mentioned on a popular podcast and I could get hundreds of angry emails and people all over the internet calling me an asshole and distorting my argument into flamebait. (Have you seen what happens when Alex Payne or Joel Spolsky expresses opinions about programming languages?) And if I say anything about other companies in my field, even lightheartedly or constructively, I’ll get pressure from investors to censor myself. (Even that sentence might get me into trouble.) With an audience, I’m much more accountable for what I say.

- I start feeling obligated to raise the average quality of what I post and stay within the bounds of what people expect me to write about.

I don’t have anything else to say about the first point for now, but the second point is relevant to nearly everyone who has a popular blog.

They feel pressure to make every post a hit while also maintaining a healthy post frequency. And if the frequency drops, the pressure increases to make every post a superstar.

Some people want it this way: they enjoy keeping their standards up and want only the best on their site. But their site is their outlet, and if they still want to publish anything that’s not “good enough” or not “relevant” for their popular site, they make second blogs or turn to Twitter.

But what if a prominent tech blogger wants to write something about scrambled eggs or show off a really great photo of his child that won’t fit in 140 characters and TwitPic? Or what if tech is slow and all of his new post ideas are on other topics for 3 weeks?

Often, this “unfit” content goes unpublished. Or it gets relegated to a secondary publishing outlet with no audience and no context in this person’s life.

To me, that’s a trap. And I refuse to fall into it. I’m still going to feel free to post photos of breakfast and argue about pillowcase assembly even if I get famous and become an A-lister (which I really don’t see happening, but am presenting here for the sake of argument).

I’m not just about technology, just as John Gruber’s not just about Apple products and Merlin Mann isn’t just about index cards and Steve Yegge can speak briefly and Jeff Atwood enjoys Rock Band and Paul Graham is a great cook and Ted Dziuba likes stuff and pretty people take shits and maybe, just maybe, there’s an area of Michael Arrington’s life in which he isn’t a dick.

People aren’t so one-sided. Everyone has a life that goes much deeper than the topics on their blogs.

I never wanted to work for a big company because it increases the likelihood of being pigeonholed, and I don’t want to be “the ______ guy” for any one thing.

I don’t need to be an authority on anything. I don’t need you to agree with my arguments. I know this is probably too long, too broad, and too egotistical for the mass market to read, and you most likely skimmed over it. I wrote this just now, and I’m going to publish it now, even though it’s Sunday and it won’t see peak traffic. I don’t want to write top-list posts 10 times a day. I don’t want to be restricted to my blog’s subject or any advertisers’ target demographic. This site represents me, and I’m random and eccentric and interested in a wide variety of subjects.

I do my own thing. I don’t need you to like it. That’s not why I do it.

But ultimately, I think people do like this sort of thing. Why do you think reality shows are so popular?

As more people start realizing that there are better reasons to write blogs beyond trying to squeeze pennies out of ads, I bet we’ll see a significant movement toward tearing down these barriers. We’ll see more complete people blogging their whole lives, not just trying to emulate magazine columns or news sites. Some of them will get large audiences, but most won’t — and it won’t matter.

◆