Daring Fireball: Open and Shut →

Shit, this is good.

I’m Marco Arment: a programmer, writer, podcaster, geek, and coffee enthusiast.

Cabel Sasser at Panic:

We thought we were going insane. This is just an AV adapter! Why are these things happening! Limited resolution. Lag. MPEG artifacts. Hang on, these are the same things we experience when we stream video from an iOS device to an Apple TV…

Apple’s Lightning Digital AV Adapter apparently packs some serious heat to achieve its mediocre output.

As much as I love the Lightning port as a device owner, this isn’t the best endorsement for the practicality of its design for accessories.

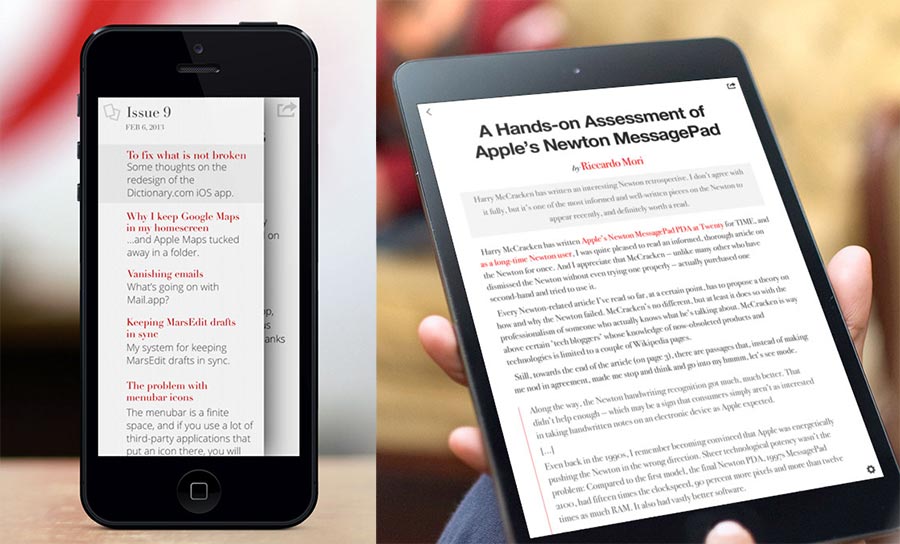

That’s actually the title of the latest newsletter from TypeEngine, a startup promising a platform to easily make Newsstand magazines a lot like mine.

I don’t really know what to think about things like this. Copying design elements and features should be done sparingly, carefully, and from a diverse pool of sources. Even though many elements of The Magazine’s design are simple or commonplace, their combination is unique.

I haven’t used TypeEngine’s app, but from their screenshots and feature descriptions, it looks like they’ve lifted quite a few elements from The Magazine and not many from other places.1

But I worry that TypeEngine, and startups like them, are focusing too much on the wrong side of this problem and filling a lot of people with false hope or misleading expectations. Any competent web developer can build a basic CMS. Any competent iOS developer can download and show HTML pages in an app. These are straightforward platforms to build. A decent developer can code an entire iOS magazine platform from scratch in 3–6 months. Granted, not everyone is a developer or can afford one, but the recent demand (and impending oversupply) of platforms that enable mass creation of The Magazine-like publications indicates a lack of understanding of the market.

The Magazine isn’t successful because I have red links, centered sans-serif headlines, footnote popovers, link previews, and a white table-of-contents sidebar that slides over the article from the left with a big shadow even on iPhone. It isn’t successful because authors write in Markdown, the CMS gracefully supports multi-user editing, we preview issues right on our devices as we assemble them, and any edits we make after publication are quickly and quietly patched into the issue right as people are reading it. Very little of this matters.

It’s succeeding because Glenn, the authors, the illustrators, the photographers, and I pour a lot of time and money into the content, relentlessly publishing roughly two original illustrations, four photos, and 10,000 polished words every two weeks.

If you want to succeed by copying something from The Magazine, copy that.

The app is secondary — it’s just a container. I’m not going to get a meaningful number of new subscribers because I add a new setting or theme. This is why publishers like Condé Nast can have such mediocre, reader-hostile apps: the apps don’t matter as much as we like to think. The content and the audience matter much more than what color your links are.

And there aren’t any startups promising a turn-key supply of great content that attracts enough paying subscribers to fund it. You’re on your own for that.

If they’re really interested in reducing cloning accusations with the argument that they’re just making a platform that other people can use to potentially make a clone of The Magazine, they should at least use a more distinct example theme in their screenshots.

I try to give new products similar to mine the benefit of the doubt — I really don’t want this to just be a clone — but decisions like this worry me. I’m seeing a lot of red flags with TypeEngine. ↩︎

Pretty big:

In our security investigation, we have found no evidence that any of the content you store in Evernote was accessed, changed or lost. We also have no evidence that any payment information for Evernote Premium or Evernote Business customers was accessed.

The investigation has shown, however, that the individual(s) responsible were able to gain access to Evernote user information, which includes usernames, email addresses associated with Evernote accounts and encrypted passwords. Even though this information was accessed, the passwords stored by Evernote are protected by one-way encryption. (In technical terms, they are hashed and salted.)

[…] in an abundance of caution, we are requiring all users to reset their Evernote account passwords. Please create a new password by signing into your account on evernote.com.

Evernote has strong technical talent, so this is noteworthy and probably not the result of simple negligence on their part. Good for them for being so honest and direct in the explanation.

(Their site is being hammered right now, so if you can’t get it from them, here’s Instapaper’s cache.)
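
If "hashed and salted" is unfamiliar, here's a minimal sketch of the general idea in Python. This is not Evernote's implementation (the notice doesn't say which algorithm they use). PBKDF2-SHA256, the 16-byte salt, the iteration count, and the function names are all assumptions chosen for illustration.

```python
import hashlib
import hmac
import secrets

# Assumed parameters for this sketch; real services tune these differently.
ITERATIONS = 100_000
SALT_BYTES = 16


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) for a new password.

    A fresh random salt per user means two people with the same
    password still end up with different stored digests.
    """
    salt = secrets.token_bytes(SALT_BYTES)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-derive the digest from the attempt and compare in constant time."""
    attempt = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(attempt, expected)
```

The server stores only the salt and the digest. Checking a login means repeating the derivation with the stored salt, so the original password never needs to be kept, which is why a leak of these values is less damaging than a leak of plain-text passwords but still a good reason to force a reset.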

A few people have sent me this story about a “coffee-powered car”, which is a great headline but isn’t actually true:

Instead of the brewed beverage, the vehicle is fueled by pellets made from the chaff that comes off of coffee beans during the roasting process, which is then heated and broken down into carbon monoxide and hydrogen, the latter of which is cooled, filtered and combusted in an internal combustion engine.

The process is called gasification and works with just about any carbon-based substance.

Indeed, the car is basically powered by burning wood pellets, and packed coffee chaff can be substituted for wood. So how much chaff does it need?

The creator of the vehicle, Martin Bacon, tells FoxNews.com that the vehicle can travel about 55 miles on a 22-pound bag of pellets, which in wood form costs about $2.50.

This would be interesting, but chaff is nearly weightless. It looks like a lot of volume, but even the slightest air current blows it away. How much coffee do you need to roast to get 22 pounds of chaff?

Here’s most of the chaff from my roast this morning. Some burns off during roasting, and a few flakes come out with the beans, but this is the majority of it, taken from the roaster’s chaff tray:

The chaff from this half-pound roast isn’t enough to register my scale’s minimum measurement of 1 gram.

I don’t know how much chaff a big shop roaster leaves behind after a big roast. (I can’t find this information anywhere, but I’d love to hear from a pro roaster who knows.) Maybe they can get more.

If we generously assume that they can yield 2 grams of chaff per roasted pound, and that chaff pellets produce the same amount of power by weight as wood pellets, they’d need to roast about 5,000 pounds of coffee to produce the 22 pounds of chaff needed to power this car for 55 miles. And that’s not including the energy required to collect all of this chaff and pack it into pellets.
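
For anyone who wants to check that arithmetic, here's a rough sketch in Python. The 2 grams per roasted pound is the generous assumption from above, not a measured figure, and the function name is made up for illustration.

```python
GRAMS_PER_POUND = 453.592


def pounds_of_coffee_needed(chaff_pounds: float, chaff_grams_per_roasted_pound: float) -> float:
    """Pounds of coffee to roast in order to collect the given weight of chaff."""
    chaff_grams = chaff_pounds * GRAMS_PER_POUND
    return chaff_grams / chaff_grams_per_roasted_pound


# 22 pounds of pellets per 55-mile trip, 2 g of chaff per roasted pound (assumed).
needed = pounds_of_coffee_needed(22, 2)
print(round(needed))  # 4990, i.e. "about 5,000 pounds"
```

Halving the assumed yield to 1 gram per pound doubles the answer, so the real number could easily be worse.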

It’s pretty far removed from what most people would assume you mean by a “coffee-powered car”.

Hamish McKenzie at PandoDaily responded to my TypeEngine post with this inflammatory headline, insulting The Magazine’s content in the article (for no apparent reason) and mischaracterizing my argument so I sound even more arrogant and out-of-touch than usual:

Arment is right about the importance of quality editorial in attracting and maintaining a readership, but his argument about platforms is surprisingly out of touch.

My post was sloppy — I should have slept on it and rewritten a lot of it the next morning.1 I was making two points that probably should have been two posts. First, TypeEngine showed poor judgment in choosing their example template. This was the meat of the second and bigger point:

But I worry that TypeEngine, and startups like them, are focusing too much on the wrong side of this problem and filling a lot of people with false hope or misleading expectations. … Granted, not everyone is a developer or can afford one, but the recent demand (and impending oversupply) of platforms that enable mass creation of The Magazine-like publications indicates a lack of understanding of the market.

I never said that platforms like 29th Street Publishing and TypeEngine shouldn’t exist. We could use some great ones, and there will be a lot of duds, just like blogging engines. I should have reinforced that point.

What I wanted (but failed) to express was that I think a lot of people assume that the tools are the biggest thing holding them back from publishing a successful Newsstand magazine, and that once these platforms are available, they’ll just copy their blog posts into a magazine and start printing money.

In reality, I think it’s going to be a microcosm of the entire App Store: a few winners and a lot of unprofitable efforts.

The Magazine isn’t a Newsstand publication just for fun — it’s a Newsstand publication because I wanted to fund magazine-quality content without ads, and iOS makes payments easy enough to make that possible. There’s a reason it’s something else and not just Marco.org Magazine, and even though I now have a platform I could use for free, I’m still not making Marco.org Magazine.

Publishing platforms will soon make it easier to get into Newsstand. But making magazine-quality content on a regular schedule, getting enough subscribers to pay when there’s tons of great online content for free, and keeping the subscribers interested after they’ve paid — those are hard, they’ll always be hard, and a lot of people are underestimating those challenges and thinking the biggest barrier is an app.

A good magazine’s editor would have made me revise it, but single-author blogs don’t usually have editors — this is one of the factors that can distinguish good magazine writing from most blog writing. ↩︎

Crashlytics addresses a huge hole in mobile app development: deep insights into an app’s performance to pinpoint and fix issues quickly and easily. Built by a hardcore team, Crashlytics is the most powerful, yet lightest weight crash reporting solution. We find the needle in the haystack, even the exact line of code that your app crashed on, so you can quickly scan and trace an issue. Crashlytics has been deployed in hundreds of millions of devices and powers thousands of today’s top applications, including Twitter, Square, Yammer, Yelp, and GroupMe.

Want to experience the magic? Sign up today.

Crashlytics is completely free. Seriously. Our goal is to provide enterprise-grade performance monitoring to everyone! Enjoy it on us.

Thanks to Crashlytics for sponsoring the Marco.org RSS feed again this week. I recently started using Crashlytics in The Magazine’s app, and I’m blown away by how good it is.

Cree released three bulbs, and the $10 one is a 40-watt equivalent. The Verge actually got their “hands on” the brighter, $13 model. (I have no explanation for their headline.)

- $12.97 for a “warm white” 60-watt equivalent, providing 800 lumens of light for 9.5W of electricity, at a warm color temperature of 2,700K

I haven’t seen these bulbs, but if they’re not complete garbage (and Cree usually isn’t), that’s a great price.

But after my extensive tests, I’ve already filled every light socket in my house that can accept an LED comfortably with today’s technology.

The market already has excellent 40- and 60-watt-equivalent LED bulbs. These are cheaper, which is noteworthy, but they don’t appear better.

LED bulbs need to get cheaper, brighter, more diffuse, smaller and lighter (so they’ll fit in more fixtures designed for incandescents), and cooler-running (so they can live longer in enclosed fixtures), roughly in that order.

The manufacturers are addressing the first one, and that’s great. But it’s hard to care about endless 60-watt choices when most households have a lot of bulbs that LEDs still can’t replace at any price.1

For years, geeks have wanted Apple to make an “xMac”: an expandable desktop tower like the Mac Pro, but much cheaper, generally achieved in theory by using consumer-class CPUs and motherboards instead of Intel’s expensive, server-grade Xeon line. Apple’s refusal to release such a product is almost single-handedly responsible for the Hackintosh community.1

Apple has shown that they don’t want to address this market, presumably because the margins are thin. And the demand probably isn’t as strong as geeks like to think: most businesses buy Windows PCs for their employees, and most consumers buy laptops for themselves. The relatively small group of people who still want desktop Macs seems to be served adequately by the iMac and Mac Mini.

With Apple now letting the Mac Pro stagnate without an update since August 2010, while the rest of the Mac line has seen multiple new generations and huge improvements since then, it seems like this just isn’t a segment that they care about anymore. The Mac Pro stagnation and the improvements in the other lines have pushed many former Mac Pro owners to the new iMacs and Retina MacBook Pros, which are so fast that they can compete with 2010’s Mac Pros.

The Mac Pro is all but forgotten now, but Dan Frakes restarted the discussion of the xMac this week, arguing for the next Mac Pro to be a consumer minitower:

Put all this together—Apple’s relentless efforts over the past few years to make everything smaller, cooler, and less power-hungry; the fact that you don’t need massive components to get good performance; and an apparent trend towards conceding the highest-end market—and it seems like a Mac minitower is a logical next step for the Mac Pro line.

I don’t think it’ll happen like this.

The Mac Pro has three problems:

Back in 2011, I wrote Scaling down the Mac Pro. Most of it still applies: most ways to significantly reduce the Mac Pro’s cost or size would make it far less attractive to the people who do still buy it.

My conclusion in 2011:

It’s impossible to significantly change the Mac Pro without removing most of its need to exist.

But I think it’s clear, especially looking at Thunderbolt’s development recently, that Apple is in the middle of a transition away from needing the Mac Pro.

Fewer customers will choose Mac Pros as time goes on. Once that level drops below Apple’s threshold for viability or needing to care, the line will be discontinued.

I bet that time will be about two years from now: enough time for Apple to release one more generation with Thunderbolt and the new Sandy Bridge-based Xeon E5 CPUs in early 2012, giving the Mac Pro a full lifecycle to become even more irrelevant before they’re quietly removed from sale.

A few power users will complain, but most won’t care: by that time, most former Mac Pro customers will have already switched away.

It’s turning out to be even more brutal than I expected: Apple didn’t even bother with a Xeon E5 update.2 It looks like they’re accelerating the Mac Pro’s demise by neglect, not preparing for its recovery.

There’s even less of a reason to buy the Mac Pro today than I expected. With Fusion Drive, an iMac can have 3 TB of storage that’s as fast as an SSD most of the time (for most use, including mine3) without any external drives. And the recent CPUs in the iMac and MacBook Pro are extremely competitive with the Mac Pro at a much lower cost.4

Coincidentally, the Mac Pro discontinuation date I predicted as two years from that post will be this November: that’s roughly when Tim Cook hinted that we should expect to see “something really great” for “pro customers” to address Mac Pro demand.

I really want that to be a great new Mac Pro, but I can’t deny that both Apple and the market are sending strong signals suggesting otherwise.

Desktop Retina displays are a wildcard. We can already observe from the Retina MacBook Pros that high-density LCD panels appear to be nearly ready for desktop sizes, but GPUs and video-cable interfaces (e.g. Thunderbolt) will be severely limiting factors. It’s possible that bringing Retina density to desktop-size displays without sucking will require the Mac Pro’s margins, thermal and power capacities, and horsepower for the first few years.

But Apple is patient, and building a Retina iMac might be significantly easier since it avoids the high-speed-video-cable problem. With Fusion Drive significantly improving disk speeds, high-end Thunderbolt A/V peripherals finally starting to appear, and so many pros already using Retina MacBook Pros and iMacs today, a Retina iMac would be a strong temptation for current Mac Pro users.

Maybe Cook’s “something really great for pro customers” is the Retina iMac. If so, as much as I hate to say it as a fan of expandable towers, that’s probably enough.

A lot of people, my past self included, also just want to build their own towers from individually purchased parts, which Apple will probably never support. But I bet Apple could win a lot of them over by just shipping an “xMac” for a reasonable price. ↩︎

A few high-end users have built Xeon E5 Hackintoshes with strong results compared to current Mac Pros. ↩︎

I’ve been using Fusion Drive in my Mac Pro for almost a month and I’m very pleased. In practice, it actually feels faster overall than the separate SSD and HDD setup did, because the SSD is finally being intelligently used for my working files even when they’re so large that I previously kept them on the HDD.

I now have 4 TB of space performing at SSD speeds most of the time, at a very reasonable cost, using only two drives. ↩︎

Today, you can get a 27” iMac that’s competitive with or faster than most sensible 2010 Mac Pro configurations (3.4 GHz quad-core Ivy Bridge, 16 GB RAM, 3 TB Fusion Drive, GTX 680MX) for $2,949.

The most sensible, comparable Mac Pro to get is probably the 3.33 GHz 6-core with 16 GB RAM, 2 TB hard drive (no small SSDs are available to fuse, but you could get an aftermarket one), the ancient Radeon HD 5870, and a 27” LED Cinema Display (not even the Thunderbolt Display, which isn’t compatible because there are still no Thunderbolt ports on the Mac Pro). It would be slightly faster than the iMac in the most CPU-bound roles, but substantially slower in many other tasks. It costs $4,673. ↩︎

In this week’s casual car podcast: Top Gear metaphors, why John Siracusa likes Hondas so much, visibility, seating ergonomics, boring Ferrari stories, ancient Volvos, and LED daytime running lights as eyeliner.

Sponsored by Squarespace: Use code NEUTRAL3 at checkout for 10% off.

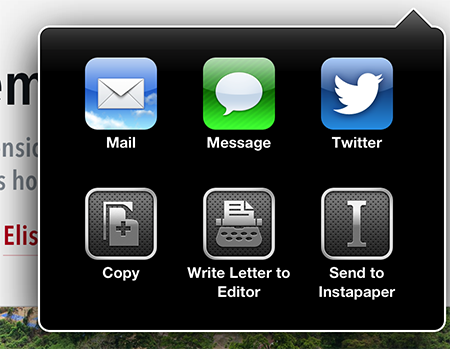

Now you can write us letters from any article, and we might publish them in a future issue. This update also adds Gmail and Sparrow support and some big bugfixes.

Remember, readers of shared articles now see full text (for a limited number of articles per month). Please share your favorite articles to help spread The Magazine. Thanks!

https://marco.org/2013/03/09/hypercritical-mac-pro-successor

John Siracusa:

The Mac Pro is Apple’s halo car. It’s a chance for Apple to make the fastest, most powerful computer it can, besting its own past efforts and the efforts of its competitors, year after year. This is Apple’s space program, its moonshot. It’s a venue for new technologies to be explored.

Very good points.

I’m still hoping for the Mac Pro to continue, but it’s also easy to see why Apple would be justified in discontinuing it.

It’s also important to keep in mind that while there hasn’t been a real Mac Pro update since August 2010, Intel didn’t deliver the successor to the 2010 Mac Pro’s CPUs, the Xeon E5 series, in volume until spring 2012, after significant delays.1 It’s only really been one year since Apple could have released new Mac Pros. And architecturally, there isn’t a good way to offer Thunderbolt on Xeon E5 platforms.

Apple may intend to keep the Mac Pro for a long time. Intel’s CPU delays, the lack of a good Thunderbolt implementation, and the relative unimportance of the Mac Pro might have just convinced them to skip the Sandy Bridge-based Xeon E5 generation and wait until the Ivy Bridge-EP chips in Q3 2013 (lining up with Cook’s “later next year”).

Until we get closure on this Mac Pro generation — discontinuation or an update — we won’t know why it’s been stagnating for so long. (Unless someone gets another email from Tim Cook.)

Too many people think that Apple can just release new models whenever they feel like it, and whenever there isn’t an update when they want one, Apple is just withholding it arbitrarily. But there are usually very good reasons, and that reason is often simply waiting for Intel to deliver the next generation of processors for a given Mac family.

The Mac Pro, until this delay, has always been very easy to predict: Apple releases a new one when Intel releases a new generation of Xeon CPUs to put into it, which happens about every 12–18 months, and the schedule is usually published at least a year in advance.

Until there’s a new line of Xeons, it’s not worth updating the Mac Pro. (This greatly annoys gamers, who make fun of the Mac Pro’s video cards when they become relatively ancient midway through each Mac Pro release cycle, but Apple doesn’t need to care because no gamers buy Mac Pros except John Siracusa.)

What makes the current delay unusual (and worrisome) is that, for the first time, there’s a great new Xeon family available and Apple decided to skip it. ↩︎

Daniel Eran Dilger:

Almost three years after Google released its WebM video encoding technology as a “free” and open alternative to the existing H.264 backed by Apple and others, it has admitted its position was wrong and that it would pay to license the patents WebM infringes.

Looks like we’re establishing a clear pattern: Google clearly (and often willfully) infringes on someone else’s IP, can’t believe that it’ll ever have any repercussions, and claims they’re doing it to be “open” or some bullshit. It betrays a culture of arrogance, entitlement, and dishonesty at Google’s highest levels.

“Open” has very little to do with anything they do. What they’re really doing most of the time is trying to gain control of the web for themselves and their products. If they really cared about being so “open”, they’d open up a nontrivial part of their business that hasn’t already been commoditized, like their searching or advertising algorithms.

As usual, “open” is just lip service. And it works. It works damn well. You wouldn’t believe the amount of nasty feedback I’m going to get for writing this from people who think Google is contributing, out of the goodness of its heart, to the grand benevolent technical cause of whatever “open” means to each of them as they happily hand over more and more of their privacy and data to the very closed vaults of the world’s biggest advertising company.

https://marco.org/2013/03/10/tweaked-apple-tv-contains-die-shrunk-a5

Turns out that the new Apple TV CPU isn’t a die-shrunk A5X as AnandTech predicted, which throws a wrench in my theory that it would be a trial production run preceding future use in a Retina iPad Mini.

Mac Rumors got their hands on the newly revised Apple TV:

Most notably, the tweaked third-generation Apple TV does not contain an A5X chip. Instead, it contains an A5 chip like its predecessor, although the new chip is considerably smaller than the previous one.

When we thought it was an A5X, it was quite interesting, since there’s not much reason for an Apple TV to use an A5X. It was easy to draw the Retina iPad Mini conclusion, since not much else made sense.

Now that we know it’s most likely the same CPU as before but die-shrunk again, it’s much less interesting: it could be a trial run of TSMC’s fabs to move more of Apple’s component business away from Samsung, it could be a 28nm-process test preceding large-scale use in a future “A7” CPU, it could be gearing up to be used in a cheaper iPhone or iPad Mini, or it could just be a way to get a bit more profit and battery life from silent revisions of existing products.

We can still reasonably assume that the Apple TV doesn’t justify custom processors on its own, but since the A5 is used in multiple products in mostly uninteresting ways, we can no longer infer anything more interesting from this (like an upcoming Retina iPad Mini) than a manufacturing detail.

Dr. Drang:

I like Daylight Saving Time, and the advantages it brings more than make up for the slight disruption in my schedule. In fact, the most annoying thing to me about the DST changeovers is hearing people complain about them.

I thought DST was dumb until I read this.

A new tech podcast that Casey Liss, John Siracusa, and I accidentally created.

We’d always talk tech after recording each episode of Neutral, because we’re three nerds and we can’t help ourselves, and now it’s a podcast.

People keep asking us why our new tech podcast isn’t on 5by5, since my Build and Analyze and John Siracusa’s Hypercritical were both 5by5 tech podcasts.

There really isn’t an interesting story behind it.

I want to own everything I do professionally unless there’s a very compelling reason not to. Some people feel uneasy having that level of control, but I feel uneasy not having it (to a fault).

When I started Build and Analyze, there were many compelling reasons to yield control and ownership to Dan: I needed a host, I didn’t know how to edit podcasts well or make them sound good, I didn’t have as much of an audience on my own, I couldn’t sell sponsorships myself, and I couldn’t devote much time to it. As far as I know, John was in a similar situation, although I can’t speak for him. That was more than two years ago, and we both ended our 5by5 shows last year for unrelated reasons.

Now, I know how to host, edit, promote, and sell a podcast without putting an insane amount of time into it. Since I’m willing to do that, John and Casey don’t need to, so it’s easy on them.

I’m not specifically avoiding 5by5 — I just don’t think we need what other people’s podcast networks provide. Maybe that will change over time, but I don’t expect it to.

Thanks to Crashlytics for sponsoring the Marco.org RSS feed again this week. It helped me identify multiple crashing bugs in The Magazine 1.2.1 that never showed up in my testing, and I was able to ship a bugfix 1.2.2 update within days.

Google fans keep sending me this as if it rebuts my Google/WebM post, but I don’t think it does.

My positions were that Google often infringes others’ IP intentionally with an assumption that they can get away with it (which is usually true), that WebM was one such instance where they assured us that it was patent-free when in fact it wasn’t, and that they cultivate an image of being “open” while actually only using “openness” in noncritical or unprofitable parts of their business.1

This post contradicts none of that, and instead is about the details of Google’s patent-licensing deal. Whether they’re negotiating royalty-free use of WebM now with patent holders doesn’t change the fact that they denied and willfully ignored its patent liabilities until now.

They also use “open” as a marketing trick against established competitors they’re trying to disrupt: by labeling their product as the “open” platform, they make their competitors look closed: evil, greedy, and out of touch. Sometimes it works to some degree, like Android. And sometimes it fails miserably, like OpenSocial. ↩︎

Based on their live, on-air discussion afterward, the biggest gains for each were 5by5 getting some great new shows and 70Decibels getting 5by5’s faster hosting, more robust platform, and larger ad-sales resources.

Congratulations to both.

Google Reader is a convenient way to sync between our RSS clients today, but back when it was launched in 2005 (before iPhones), it destroyed the market for desktop RSS clients. Client innovation completely stopped for a few years until iOS made it a market again — but every major iOS RSS client is still dependent on Google Reader for feed crawling and sync.

Now, we’ll be forced to fill the hole that Reader will leave behind, and there’s no immediately obvious alternative. We’re finally likely to see substantial innovation and competition in RSS desktop apps and sync platforms for the first time in almost a decade.

It may suck in the interim before great alternatives mature and become widely supported, but in the long run, trust me: this is excellent news.

It’s not a die shrink, and it’s still manufactured by Samsung. But it’s single-core:

If we compare this with the earlier 32-nm APL2498 part, it looks as though we now have a single-core ARM A9 CPU, together with a dual-core GPU as in the APL2498. The Apple TV has always been 1-core, but the APL2498 had one core disabled – maybe Apple now thinks that sales will be enough to justify a dedicated part, or maybe we are going to see another single-core device in a different product line.

To get the almost 50% die size reduction that we have, though, there has to be more than removal of one core – other changes have been made. As yet we haven’t quantified them.

This is getting interesting again.

Knowing Apple, this revised, single-core A5 wasn’t made only for the Apple TV. The big question, then: Where else might Apple want to use a small, cheap, single-core A5?

I’d guess it’s still too big and power-hungry for a watch, but if they want to shave component costs down for a low-cost iPhone or an even cheaper iPad Mini, this could be a good CPU candidate.

Daniel Jalkut:

By implementing a suitable syncing API for RSS, and implementing a reasonably useful web interface, Black Pixel could establish NetNewsWire Cloud as the de facto replacement for Google Reader.

I’d love to see that.

In this episode of our car show, what optional equipment should you get or avoid? We debate navigation, sunroofs, parking assistance, premium stereos, and more. We also follow up on octane myths and the 3-series GT.

Sponsored by Squarespace: Use code NEUTRAL3 at checkout for 10% off.

https://marco.org/2013/03/14/baby-steps-replacing-google-reader

With Google Reader’s impending shutdown, lots of new feed-sync services and self-hostable projects will be popping up.

Nearly every mobile and desktop RSS client syncs with Google Reader today, often as the only option. Getting widespread client support for any other service will be difficult since it’s probably going to be a while before there’s a clear “winner” to switch to.

The last thing we need is a format war — with Reader’s shutdown in July, we don’t have time for one.

An obvious idea that many have proposed (or already implemented) is to make a new service mirror the (never-officially-documented) Google Reader API. Even if it also offers its own standalone API for more functionality, any candidates to replace Google Reader should mirror the fundamentals of its API.

Clients then only need to change two simple things:

- Add a Hostname field that defaults to www.google.com for now. Once popular services emerge, they can add optional presets, but for now, this is all we need.
- Then use that as the hostname for all API requests: https://{hostname}/reader/api/0/...
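
On the client side, the hostname swap described above could be sketched like this in Python. The function names and the `example/path` endpoint are placeholders I made up; the only parts taken from the proposal are the `www.google.com` default and the `https://{hostname}/reader/api/0/` prefix.

```python
from urllib.parse import urlsplit

DEFAULT_HOSTNAME = "www.google.com"


def api_base_url(hostname: str = DEFAULT_HOSTNAME) -> str:
    """Return the Reader-style API root for a user-supplied hostname."""
    host = hostname.strip().rstrip("/")
    if not host:
        # An empty field falls back to the default.
        host = DEFAULT_HOSTNAME
    # Accept only a bare host (optionally host:port): no scheme, path, or query.
    parts = urlsplit("//" + host)
    if parts.path or parts.query or parts.fragment or not parts.hostname:
        raise ValueError(f"not a bare hostname: {hostname!r}")
    return f"https://{host}/reader/api/0/"


def api_url(path: str, hostname: str = DEFAULT_HOSTNAME) -> str:
    """Build a full request URL from the hostname setting and an API path."""
    return api_base_url(hostname) + path.lstrip("/")


# Same client code, different backend: only the hostname setting changes.
print(api_url("example/path"))
print(api_url("example/path", "reader.example.com"))
```

Everything else in the client (authentication, request parameters, response parsing) stays as it is, which is the whole point: a compatible service only has to answer at the same paths.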

Like it or not, the Google Reader API is the feed-sync “standard” today. Until this business shakes out, which could take years (and might never happen), this is the best way forward.

We need to start simple. We don’t have much time. And if we don’t do it this way, the likely alternative is that a few major clients will make their own custom sync solutions that won’t work with any other company’s clients, which won’t bring them nearly as much value as it will remove from their users.

Let’s get Reeder and NetNewsWire to support the Hostname field ASAP so we can build alternative services to back them. Once they support it, other clients will need to follow from the competitive pressure of checklist feature comparisons. The result will be the easiest, fastest solution for everyone and a healthier ecosystem for feed-sync platforms.

What do you think, Silvio Rizzi and Black Pixel?

I don’t browse Hacker News regularly because too few of its posts interest me. But my posts often rank well there and gather a lot of comments, then various Twitter bots start showing up in my saved search for Marco.org links, so I usually end up reading the comment threads on my posts.

I probably shouldn’t.

It’s remarkable (and quite sad) how so many commenters there get so angry and have so much hatred toward people who write opinions they disagree with about electronics. But this epic rant is probably the most unintentionally amusing, ridiculous one I’ve seen against me to date, by someone I’ve never met, in response to my boring, uncontroversial Google Reader API post:

davidpayne11:

Please, as an advice to general HN posters, please avoid posting marco.org links here on HN, because his sole intention is to sell his readers out more than focusing on writing. That’s a fairly grande accusation, but it’s justified.

Do you know why is he writing about Google Reader now? Go to your HN homepage right now, as of writing this comment, the Google reader announcement has about 1700 upvotes. Ouch, that’s a lot of views for someone to let go of. Hence, if someone writes something that compliments this announcement, common sense tells me that they would get more page views.

There’s nothing wrong in having ads on your blog/website, people do it all the time. What’s wrong is trying to create an impression to your readers that your sole intention is to write quality content, while you care just about pageviews. Please, realize that marco.org is no different from Techcrunch!

Marco isn’t innocent, if you’ve been following him closely. Also, I think it would help if you take a look at this page where he just blatantly sells us, his readers like some piece of junk commodity. https://marco.org/sponsorship

Wow.

https://marco.org/2013/03/14/has-brent-simmons-posted-this-often-ever-questionmark

Brent Simmons:

This is elegance. It derives from the design of the internet and the web and its many open standards — designed so that no entity can control it, so that it survives stupidity and greed when it appears.

Great piece.

RSS isn’t “dead”. Not even close. We’ve just come up with a lot of alternatives to traditional RSS readers in the last few years.

This week: Google Reader shutting down, the RSS market and client architecture, demand for Google I/O and WWDC tickets, Apple pessimism, and diversifying the iPhone line. I think this is our best episode yet.

We’re jumping the gun a bit since episode 4 was just released on Monday, but to reduce lag between recording and publishing, we’re now going to publish on Fridays. This is like the podcast version of getting an extra hour of sleep for the fall DST change: you get two episodes this week.

Anand Lal Shimpi, after seeing the new single-core A5 and measuring that its Apple TV draws much less total power:

The implications of this smaller, lower power A5 SoC are unclear to me at this point. It seems to me that the Apple TV now sells well enough to warrant the creation of its own SoC, rather than using a handmedown from the iPad/iPhone lineup. The only question that remains is whether or not we’ll see this unique A5 revision appear in any other devices. There’s not a whole lot of room for a single-core Cortex A9 in Apple’s existing product lineup, so I’m encouraged to believe that this part is exclusively for the Apple TV.

I’m still torn on this. I bet that if Apple engineers a whole new device to be the “cheaper iPhone” rather than continuing to sell old models, or if they do a similar move for a future cheaper iPad Mini, this would be an obvious CPU candidate: not only is it tiny, cheap, and fast enough for an iPhone or iPad while leaving a big enough performance gap to drive higher-end buyers to the higher-priced products, but the low power requirements would let Apple shrink the battery a bit, further reducing weight and cost. Every cent matters at Apple’s scale.

But the fundamental assumption I made when this CPU was first rumored — that the Apple TV sold too few units to justify its own custom CPU — was probably wrong, leaving a very boring, plausible explanation for this new CPU that has nothing to do with future iPhones or iPads.

Crashlytics addresses a huge hole in mobile app development: deep insights into an app’s performance to pinpoint and fix issues quickly and easily. Built by a hardcore team, Crashlytics is the most powerful, yet lightest weight crash reporting solution. We find the needle in the haystack, even the exact line of code that your app crashed on, so you can quickly scan and trace an issue. Crashlytics has been deployed in hundreds of millions of devices and powers thousands of today’s top applications, including Twitter, Square, Yammer, Yelp, and GroupMe.

Want to experience the magic? Sign up today.

Crashlytics is completely free. Seriously. Our goal is to provide enterprise-grade performance monitoring to everyone! Enjoy it on us.

Thanks to Crashlytics for sponsoring the Marco.org RSS feed again this week. Crashlytics has become a crucial part of my work. Highly recommended.

Google Reader’s upcoming shutdown and Mailbox’s rapid acquisition have reignited the discussion of free vs. paid services and whether people should pay for products they love to keep them running sustainably.

But users aren’t the problem. As Michael Jurewitz wrote, many tech startups never even attempt to reach profitability before they’re acquired or shut down. Nobody ever had a chance to pay for Google Reader or Mailbox.1

When a free option is available in a market of paid alternatives, far more people will choose the free product, often by an order of magnitude or more. Asking people to pay unnecessarily is asking them to behave irrationally and against their own immediate best interests, even if it’s probably worse long-term. (This behavior affects far more than the tech industry.) And when the free product is better in some ways, which is often the case when a tech giant or well-funded startup enters a market previously occupied only by small and sustainable businesses, the others don’t stand a chance.

The risks are very low for new consumer tech startups because everything required to start one is relatively cheap and can often be ready to launch within a few months of starting development. If a product grows huge quickly, which almost always requires it to be free, it will probably be acquired for a lot of money. Free products that don’t grow quickly enough can usually die with an “acquihire”, which lets everyone save face and ensures that the investors get something out of the deal. Investors make so much from big acquisitions that they can afford to lose a lot of other unsuccessful products in the meantime.

So even in the worst cases, free products don’t usually end too badly. Well, unless you’re a user, or one of the alternatives that gets crushed along the way. But everyone who funds and builds a free product usually comes out of it pretty well, especially if they don’t care what happens to their users.

Free is so prevalent in our industry not because everyone’s irresponsible, but because it works.

In other industries, this is called predatory pricing, and many forms of it are illegal because they’re so destructive to healthy businesses and the welfare of an economy. But the tech industry is far less regulated, younger, and faster-moving than most industries. We celebrate our ability to do things that are illegal or economically infeasible in other markets with productive-sounding words like “disruption”.

Much of our rapid progress wouldn’t have happened if we had to play by the rest of the world’s rules, and I think we’re better off overall the way it is. But like any regulation (or lack thereof), it’s a double-edged sword. Our industry is prone to many common failures of unregulated capitalism, with the added instability of extremely low barriers to entry and near-zero cost per user in many cases.

If you try to play by the traditional rules and regulations, you run the risk of getting steamrolled by someone who’s perfectly willing to ignore them. Usually, that’s the biggest potential failure of the tech world’s crazy economy, which sucks for you but doesn’t matter much to everyone else. But sometimes, just like unregulated capitalism, it fails in ways that suck for everyone.

Google Reader dominated the feed-reading and feed-sync markets so much that almost no alternatives exist, and the few alternatives are very unpopular. Reader is effectively a monoculture, if not a monopoly. And it isn’t just one popular provider of an open standard — it’s a proprietary service that takes a lot of work for anyone else to replicate (as many people are going to learn over the next few months).

While the constant churn of young free products doesn’t usually do much harm, proprietary monocultures are extremely vulnerable to outcomes that suck for everyone. And a proprietary monoculture that’s unprofitable, shrinking in popularity, or strategically inconvenient for its owner is far worse. It’s likely to disappear at any moment, and there may not be anything to fill the gap for months or years, if ever. For all of the people who use RSS every day, as part of their jobs or hobbies, imagine the disruption to their lives if Google had shut down Reader immediately without notice. (Now think of how far off July isn’t.)

If Reader was just a free, popular host running open-source software, anyone could just set up the same services on any web host and migrate their data without much disruption. But it’s not, so it’s going to be a lot more work and much more disruption to our workflows and habits in the meantime.

And we lucked out with Reader — imagine how much worse it would be if website owners weren’t publishing open RSS feeds for anyone to fetch and process, but were instead posting each item to a proprietary Google API. We’d have almost no chance of building a successful alternative.

That’s Twitter, Facebook, and Google+. (Does the shutdown make more sense now?)

The best thing we can do isn’t necessarily to try to pay for everything, which is unrealistic and often not an option. Our best option is to avoid supporting and using proprietary monocultures.

Charging money for something also doesn’t guarantee profitability. Consider Sparrow. ↩︎

https://marco.org/2013/03/19/why-android-updates-are-so-slow

Good explanation of the process, but I think it gives too many of the involved parties a pass on their sluggish behavior. It seemingly asked the subjects, “How long do you usually take to do each of these steps?”, rather than “How can you make this faster?”

Dismissing Apple’s faster update speed with iOS as an “illusion” doesn’t hold up to scrutiny. Apple has released four iOS updates to all still-supported devices in the last two months, with one bugfix update (6.1.1) appearing just nine days after the previous update. Apple has a much smaller pool of devices to test, but that can’t be the only thing they’re doing differently and the only way the Android ecosystem could improve this fairly severe problem.

A better explanation of Android’s slow update speed is buried in the article, but not given enough credence: Google doesn’t do everyone’s testing, so it’s left to the manufacturers and carriers, who just don’t give a shit about updating your year-old phone.

Consumers aren’t completely powerless, though. Almost every phone on the market today can be rooted.

The Android way: Our problems become your problems.

This has been circulating again over the last few days:

As it turned out, sharing was not broken. Sharing was working fine and dandy, Google just wasn’t part of it. People were sharing all around us and seemed quite happy. A user exodus from Facebook never materialized. I couldn’t even get my own teenage daughter to look at Google+ twice, “social isn’t a product,” she told me after I gave her a demo, “social is people and the people are on Facebook.”

It’s a year old, but it appears from the outside that it’s still probably accurate.

Dan Frommer wrote some interesting speculation about Android and called out some of its failings, but I disagree that this part represents Android failing to meet its goals:

Android has done little to radically disrupt the mobile industry. The majority of power still belongs to the same telecom operators that ruled five years ago, and many of the same handset/component makers. Google has helped Samsung boringly ascend and has accelerated decline at Nokia and BlackBerry. It has perhaps stopped Apple from selling as many phones as it might in an Android-free world, and has helped prevent Microsoft from gaining a solid foothold in mobile. …

It’s true that the carrier model hasn’t been disrupted, and in fact, Android has helped strengthen it (and build an uncomfortably close, “open”-internet-hostile relationship between Google and Verizon Wireless).

But back when the first iPhone was making a huge splash, when Google started taking Android seriously and quickly pivoted it from a BlackBerry ripoff into an iOS ripoff, I don’t think they intended to disrupt the established carrier or retail models.

I think it was a defensive move. Mainstream computing and internet usage were clearly moving away from PCs and toward mobile devices, and Google saw a potential future where their core business — web search with their ads — could be wiped out by a single Bing-exclusive deal on a dominant, locked-down platform.1

Google needs Android to have substantial market share to prevent any other platform vendor from ever locking Google’s most important services out of a major part of mainstream computing. It’s the same reason Chrome and Chrome OS exist. In an industry dominated by vertically integrated control freaks, everyone must become one to ensure that nobody else can lock them out.

The flaw with the Android strategy is that, in practice, it doesn’t prevent powerful manufacturers from forking it and cutting Google out. Google probably assumed that no single manufacturer would ever get powerful enough to create their own alternatives to Google’s integrated apps, store, and services, and that Android would be deployed much like Windows on PCs: largely unmodified, with manufacturers all shipping almost the same software and differentiating themselves only in hardware. But as we’ve seen already with Amazon and the Kindle Fire platform (and maybe soon, the powerful Samsung Galaxy line), that’s not a safe assumption anymore.

So I agree with Frommer’s conclusions: maybe big changes are on the horizon at Google to fix this. Old Google, in 2007, frequently toyed around with “open” projects that might not lead anywhere useful for the overall business. Old Google made Android.

New Google is much more strategic, cold, and focused. Let’s see what they do to it.

This concern isn’t overly paranoid or unwarranted: it already happened with iOS 6 Maps. Sure, there’s now a Google Maps app that you can download separately, but most iOS users aren’t likely to bother, and it can never be as deeply integrated into other parts of iOS as Apple’s native Maps service. ↩︎

In this episode of our casual car podcast: LaFerrari, hybrid supercars, modern car complexity, electric vehicles’ future range, aspirational SUVs, and our dream cars. (Bonus at the end: What does John Siracusa drink?)

Sponsored by Squarespace: Use code NEUTRAL3 at checkout for 10% off.

When will the press stop listening to anything Eric Schmidt says?

Maybe right after developers start writing for Android before iOS and Google TV is in the majority of new TVs by last summer.

Or maybe when young adults are granted free name changes to distance themselves from their teenage social posts that Google keeps forever. (This one’s at least a real problem, although his proposed solution would be both comical and ineffective.)

I usually agree with Om Malik, but not this time, on why he won’t use Google Keep, Google’s new Evernote clone:

It might actually be good, or even better than Evernote. But I still won’t use Keep. You know why? Google Reader.

I spent about seven years of my online life on that service. I sent feedback, used it to annotate information and they killed it like a butcher slaughters a chicken. No conversation — dead. The service that drives more traffic than Google+ was sacrificed because it didn’t meet some vague corporate goals; users — many of them life long — be damned.

Looking from that perspective, it is hard to trust Google to keep an app alive. What if I spend months using the app, and then Google decides it doesn’t meet some arbitrary objective?

In this business, you can’t count on anything having longevity. To avoid new services that are likely to get shut down within a few years, you’d have to avoid every new tech product. Products and services lasting more than a few years are the exception, not the rule.

And unfortunately for users, Google doesn’t owe anyone a “conversation” about what they do with their products. Companies can do whatever they want. They could shut down Gmail tomorrow if it made business sense. There wouldn’t be a conversation.

Users have no power.

We can complain about Google Reader’s shutdown and start as many online petitions as we think will make a difference,1 but we all have short memories and can’t resist free stuff.

Want to really stick it to them? Stop using Google. All of it. Search, Gmail, Maps, the works. Delete your account and start using Bing. Ready?

…

Yeah. That’s the problem. You won’t. I won’t. Nobody will.

It’s not just Google. Everyone does this. Facebook introduces some giant privacy invasion or horrible redesign every six months, then when its users “revolt”, they roll back some of it, and everyone forgets about it because they got what they wanted: Facebook still accomplished most of what they wanted to do, and the users feel like they matter. Almost nobody left Twitter when they started screwing over their API developers, almost nobody switches away from iOS when Apple controversially rejects an app, and almost nobody stopped going to your corner deli after they raised the price of your favorite Thursday sandwich.

They’re not all evil or mean — business owners often need to make decisions that anger some people. That’s the nature of business, government, parenthood, and life. Facebook and Google need to collect more of your personal data and keep you on their sites longer so they can keep increasing their ad revenue. Twitter needs to take control of its product and its users’ attention away from third-party clients so it doesn’t become an unprofitable dumb pipe like AOL Instant Messenger. Apple needs to keep the App Store locked down so people feel safe buying apps (and more iOS devices) and developers are forced to use Apple’s services, APIs, and stores. Your corner deli needed to raise the price of your favorite Thursday sandwich because their health-insurance premium increased by 10% this year. The deli’s health-insurance company needed to raise premiums by 10% because that’s the most that New York State would permit, and how would the insurance company’s CEO justify not taking that opportunity for more profits to the board and shareholders?

That’s business.

Google Keep will probably get as many users as Google’s other low-priority side projects: not many. They’ll probably shut it down within two years. A few people will complain then, too, and they’ll be powerless then, too, but they’ll keep using Google’s stuff after that, too.

Users need to be less trusting of specific products, services, and companies having too much power over their technical lives, jobs, and workflows.

In this business, expect turbulence. And this is going to be increasingly problematic as (no turbulence pun intended) we move so much more to “the cloud”, which usually means services controlled by others, designed to use limited or no local storage of your data.

Always have one foot out the door. Be ready to go.

This isn’t cynical or pessimistic: it’s realistic, pragmatic, and responsible.

If you use Gmail, what happens if Google locks you out of your account permanently and without warning? (It happens.) What if they kill IMAP support and you rely on it? Or what if they simply start to suck otherwise? How easily can you move to a different email host?2 How much disruption will it cause in your workflow? Does your email address end in @gmail.com? What would have happened if we all switched to Wave? What happens if Facebook messages replace email for most people?

Proprietary monocultures are so harmful because they hinder or prevent you from moving away.

This is why it’s so important to keep as much of your data as possible in the most common, widespread, open-if-possible formats, in local files that you can move, copy, and back up yourself.3 And if you care about developing a long-lasting online audience or presence, you’re best served by owning your identity as much as possible.

Investing too heavily in someone else’s proprietary system for too long rarely ends gracefully, but when it bites us, we have nobody to blame but ourselves.

Has an online petition ever effected change? (The vague, unquantifiable, feel-good fallback of “raising awareness” doesn’t count.) ↩︎

This is why I don’t use Gmail. ↩︎

I don’t mind using Dropbox because it’s compatible with responsible practices. If Dropbox goes away, you still have a folder full of files, and there will always be other ways to sync folders full of files between computers. Even if Dropbox’s client somehow screws up and deletes all of its files, you can just restore them from a backup, because it’s just a folder full of regular files on your computer.

When most “cloud” companies or proprietary platforms cease to exist, they fall out of the sky like a plane without power, and everything is lost. Dropbox failing or ceasing to exist would be more like a train losing power: it stops moving, but everything’s still there and everyone’s fine, albeit mildly inconvenienced. ↩︎

This week’s podcast: Buying a TV, regular people and high-DPI screens, the amazing Mac lineup that we barely care about, revisiting the Microsoft Store, and why Garageband’s support of Audiobus is so interesting.

Sponsored by Squarespace: Use code ATP3 at checkout for 10% off.

Om Malik, continuing the conversation by clarifying his point:

It is hard to trust Google anymore to make rational and consumer centric decisions. I said — nuanced as it might be — that I don’t trust Google to introduce new apps and keep them around, because despite what the company says, these apps are not their main business. Their main business is advertising and search — regardless of whatever nonsense you might read. …

I am far more likely to believe in and use products that are the main focus of the company behind them. Online storage? Dropbox. Time-shifting web content? … Instapaper. Short form communication? Twitter. Baby pictures and wedding photos to make single people miserable (or happy for being single)? Facebook.

The point is that a company whose main focus is a specific service or a singular product, like Evernote, is far more likely to focus its energies to build a business around it and keep it around.

It’s a good distinction to make. I’m more conservative about what I invest my time into and who I trust with my data.

I didn’t even realize many of these were dead.

Nick Bilton reports that the FAA plans to permit reading devices during takeoff and landing:

According to people who work with an industry working group that the Federal Aviation Administration set up last year to study the use of portable electronics on planes, the agency hopes to announce by the end of this year that it will relax the rules for reading devices during takeoff and landing. The change would not include cellphones.

Any progress would be nice, but this is a weird distinction: “reading devices” are OK, but phones aren’t? The huge-phone/small-tablet markets are converging as we speak. Are 5-inch “phablets” considered phones or reading devices?

Is the Kindle Fire a reading device since it’s named “Kindle”, even though it can do a lot more? Are iPads reading devices? How about an iPad Mini with an LTE radio? I assume iPhones would be prohibited as “cellphones”, but what about iPod Touches?

This silly distinction will only cause problems. Why attempt to confusingly and ineffectively draw that line?

If the distinction is about cellular radios, what about Kindles and iPads with 3G? And if the distinction is to prevent passengers from annoying each other by talking on the phone, does it also prohibit using Skype or FaceTime on a non-“phone” device?1

Why call out the use-case of “reading”, specifically? What about gaming devices? Media players? Can I read on a laptop if I don’t use the tray table? If devices with keyboards aren’t allowed, would a Surface Pro be permitted? Are flight attendants prepared to enforce and keep up with these distinctions?

If you’re using an iPad, must you be reading during taxi, takeoff, and landing instead of watching a movie or playing Super Stickman Golf 2? Am I allowed to listen to Phish in my headphones while I’m reading? If not, are audiobooks or screen-readers allowed, or are we discriminating against the visually impaired?

Would I be permitted to be productive at all, or is only consumption allowed? Could I write? Code? Draw? Compose? Run some reports? Reboot a server? Why specifically make an exception for reading with everything our modern devices can do?

Last year, the agency announced that an industry working group would study the issue. The group, which first met in January, comprises people from various industries, including Amazon, the Consumer Electronics Association, Boeing, the Association of Flight Attendants, the Federal Communications Commission and aircraft makers.

Oh. (Emphasis mine.)

If reducing annoyance is a goal, why not lock the seats and prohibit tuna sandwiches? ↩︎

The Onion:

“Yeah, of course gay men and women can get married. Who gives a shit?” said Chief Justice John Roberts, who interrupted attorney Charles Cooper’s opening statement defending Proposition 8, which rescinded same-sex couples’ right to marry in California. “Why are we even seriously discussing this?”

MG Siegler:

I can’t help but get the feeling that the ramifications of Google killing off Reader are going to be far more wide-reaching than they may appear at first glance.

… I think it’s just as likely that a large amount of those regular visitors go away and never come back.

I’m a little worried about this possibility. Short-term, it will definitely be a problem. Long-term is the question.

With the decreasing use of RSS readers over the last few years, which will probably be accelerated by Google Reader’s shutdown in July, many are bidding good riddance to a medium that they never used well.

RSS is easy to abuse. In 2011, I wrote Sane RSS usage:

You should be able to go on a disconnected vacation for three days, come back, and be able to skim most of your RSS-item titles reasonably without just giving up and marking all as read. You shouldn’t come back to hundreds or thousands of unread articles.

Yet that’s the most common complaint I hear about inbox-style RSS readers such as Google Reader, NetNewsWire, and Reeder: that people gave up on them because they were constantly filled with more unread items than they could handle.

If you’ve had that problem, you weren’t using inbox-style RSS readers properly. Abandoning the entire idea of the RSS-inbox model because of inbox overload is like boycotting an all-you-can-eat buffet forever because you once ate too much there.

As I said in that 2011 post:

RSS is best for following a large number of infrequently updated sites: sites that you’d never remember to check every day because they only post occasionally, and that your social-network friends won’t reliably find or link to.

Building on that, you shouldn’t accumulate thousands of unread items, because you shouldn’t subscribe to feeds that would generate that kind of unread volume.

If a site posts many items each day and you barely read any of them, delete that feed. If you find yourself hitting “Mark all as read” more than a couple of times for any feed, delete that feed. You won’t miss anything important. If they ever post anything great, enough people will link to it from elsewhere that you’ll still see it.

The true power of the RSS inbox is keeping you informed of new posts that you probably won’t see linked elsewhere, or that you really don’t want to miss if you scroll past a few hours of your Twitter timeline.

If you can’t think of any sites you read that fit that description, you should consider broadening your horizons. (Sorry, I can’t think of a nicer way to put that.)

Some of my RSS subscriptions that my Twitter people usually don’t link to: The Brief, xkcd’s What If, Bare Feats, Dan’s Data (and his blog), ignore the code, Joel on Software, One Foot Tsunami, NSHipster, Programming in the 21st Century, Neglected Potential, Collin Donnell, Squashed, Coyote Tracks, Mueller Pizza Lab, Best of MetaFilter, The Worst Things For Sale.

Many are interesting. Many are for professional development. Some are just fun.

But none of them update frequently enough that I’d remember to check them regularly. (I imagine many of my RSS subscribers would put my site on their versions of this list.) If RSS readers go away, I won’t suddenly start visiting all of these sites — I’ll probably just forget about most of them.

It’s not enough to interleave their posts into a “river” or “stream” paradigm, where only the most recent N items are shown in one big, combined, reverse-chronological list (much like a Twitter timeline), because many of them would get buried in the noise of higher-volume feeds and people’s tweets. The fundamental flaw in the stream paradigm is that items from different feeds don’t have equal value: I don’t mind missing a random New York Times post, but I’ll regret missing the only Dan’s Data post this month because it was buried under everyone’s basketball tweets and nobody else I follow will link to it later.
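A toy Python sketch makes the difference concrete. It assumes a made-up data model (dicts with `feed`, `published`, and `title` keys) and invented feed names. It is not how any particular reader works, only a way to see what a "most recent N" cutoff does to a quiet feed.

```python
from collections import defaultdict


def river(items, n):
    """Stream model: only the n most recent items across all feeds, newest first."""
    return sorted(items, key=lambda it: it["published"], reverse=True)[:n]


def inbox(items):
    """Inbox model: every unread item is kept, grouped by feed."""
    by_feed = defaultdict(list)
    for it in items:
        by_feed[it["feed"]].append(it)
    return dict(by_feed)


# Hypothetical data: one post from a quiet blog, then 50 newer posts from a busy site.
items = [{"feed": "quiet-blog", "published": 0, "title": "Monthly post"}]
items += [{"feed": "busy-site", "published": t, "title": f"Post {t}"} for t in range(1, 51)]

shown = river(items, n=20)
print(any(it["feed"] == "quiet-blog" for it in shown))  # False: pushed out of the river
print("quiet-blog" in inbox(items))                     # True: still waiting in the inbox
```

The river drops the quiet blog's only post as soon as 20 newer items arrive. The inbox keeps it until it has been read, which is the whole point for infrequently updated sites.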

Without RSS readers, the long tail would be cut off. The rich would get richer: only the big-name sites get regular readership without RSS, so the smaller sites would only get scraps of occasional Twitter links from the few people who remember to check them regularly, and that number would dwindle.

Granted, this problem is mostly concentrated in the tech world where RSS readers really took off. But the tech world is huge, and it’s the world we’re in.

In a world where RSS readers are “dead”, it would be much harder for new sites to develop and maintain an audience, and it would be much harder for readers and writers to follow a diverse pool of ideas and source material. Both sides would do themselves a great disservice by promoting, accelerating, or glorifying the death of RSS readers.

Say hello to Rockland, the latest update to Igloo’s cloud intranet platform.

Igloo is now sporting responsive design. Your intranet design can be fully optimized for almost any device you’re using (though we haven’t tested it on the Tesla Model S). Create content, download documents — almost everything you can do on your desktop, you can do on your phone or iPad. Admins can even update CSS, user permissions, and page layouts right from their iPhones.

We’ve also built a multi-channel widget, allowing you to create custom views into multiple calendars, blogs, or forums in one spot. It’s like a shared corporate calendar on buttered coffee.

There are upgrades everywhere: our calendar now supports Outlook (or Gmail) reminders, we’ve added two new language packs (Italian and German. Guten Tag.), better timezone support, search updates, security improvements, and even a new mobile app dedicated to finding the latest information fast.

Learn more about Rockland or sign up to try Igloo today.

Thanks to Igloo Software for sponsoring Marco.org this week.

Previously, I had never found a weather website anywhere near as good as weather apps on iOS. Weather sites have always been so terrible that I’d just check the weather on my phone, even when sitting at my computer, because the thought of going to a weather site was so unappealing.

Now, I use Forecast. They were nice enough to let me beta-test the site, and it’s great.

Emin Gün Sirer:

Let’s leave aside three unrelated factoids, namely, the Summly founder is only 17 years old, the company was in existence for only 18 months and Yahoo reportedly paid $30M for it. …

I want to first focus on this from a technologist’s perspective, and there is only one germane fact: the company developed no Natural Language Processing technology of its own.

They licensed the core engine from another company.

A lot of geeks have been yelling today about the Summly acquisition, which is really a textbook acqui-hire: a startup that appeared to be missing its growth targets was acquired for a fairly modest price and immediately shut down by a big tech company in need of good talent.

I don’t know if Emin’s assertion about the tech is correct. We barely know anything about Summly because the press has utterly failed at telling us anything useful about it.

All we really know is that Nick D’Aloisio is young. He started the company when he was 15. Did I mention he’s young? And he started a company. And he was only 15!

Now he’s a millionaire, and he’s only 17! Can you believe that? A tech millionaire! He’s only 17! And he must be smart. After all, he started the company when he was only 15. Young, smart, and now rich! What will his girlfriend think? (Really. Shameful.)

All we know about Summly itself is that it summarized web articles into three bullet points that occasionally made sense. And I only know that because I tried it while D’Aloisio was barraging me with manipulative, fake-“URGENT” emails much like these in late 2011. The summaries weren’t good enough for me to try more than a few times. But the press never talked about the actual product in their vapid articles about Nick D’Aloisio’s youth.

In the early days of Tumblr, David Karp didn’t tell anyone his age. He hired me when he was 19, and I worked for him for over a year before I learned that — and even then, I only knew because he let it slip in an interview. He wanted to keep it quiet for as long as he could because he knew that as soon as it got out, every story about Tumblr would just be about David’s youth.

He was right. After that, he’d give hours-long interviews with big newspaper and magazine writers, trying to tell them all about the product, and the article would come out as a vapid fluff piece about how young he was. It took years for Tumblr to (mostly) get out from under that image in the press.

In this episode: Replacing expensive built-in options with Automatic, low expectations for built-in car software, Mini cars, and targeting young buyers.

Sponsored by Squarespace: Use code NEUTRAL3 at checkout for 10% off.

Next week, we’ll be off as Casey and I drive my new car in Germany. Neutral’s final episode will be the following week, April 11, wrapping up this podcast mini-series. Thanks, everyone! It’s been fun. (ATP will continue.)

This week’s issue of The Magazine is special: instead of running five articles of our typical length (about 1,500 words), we published two regular-length articles and an amazing, 7,500-word feature article by Pulitzer-winner (!) Eli Sanders, with illustrations by Tom Tomorrow, on the status and future of domestic drones — from hobbyist to government uses — and the potential ramifications to our society.

Since the app experience isn’t ideal for articles this long, we’ve split it into three parts. Here’s part one. Highly recommended.

It’s rumored to be an HTC phone running Facebook’s custom fork of Android full of Facebook apps to do everything. Your Facebook news feed will live in your pants all the time. Who wouldn’t want that?

With deeper control of a modified operating system would come huge opportunities to collect data on its users. Facebook knows that who you SMS and call are important indicators of who your closest friends are. Its own version of Android could give it that info, which could be used to refine everything from what content you’re shown in the news feed to which friends’ faces are used in ads you see.

Sign me up!

This week’s episode: Summly and Yahoo, retaining good tech talent, and iCloud data sync for apps: why it doesn’t work well, whether it’s fixable, and whether you should use it even if it gets fixed.

Sponsored by Squarespace: Use code ATP3 at checkout for 10% off.